Signal characterization of the controller output and input was used to cancel valve and orifice nonlinearity, respectively. Many of these PID techniques were refined for boiler control. Later, signal characterization was also applied to the controller input to cancel the nonlinearities of the process variable for neutralizer pH and distillation column temperature control. A whole host of supervisory control techniques were programmed into a host computer. An experienced engineer could employ all sorts of tricks, but each function generally required a separate device, and the tuning and maintenance of these were often too tricky for even the most accomplished personnel.

Furthermore, the degree of advanced control was set during the project estimation stage because adaptive, batch, feedforward, error squared, nonlinear, override, ratio and supervisory PID controllers had different model numbers, wiring and price tags. If you changed your mind, it meant buying a new controller and scrapping an existing one.

Signal characterization, selection, and calculations required separate boxes and special scaling factors. Only the strong survived. Leftover SAMA block and wiring diagrams and pot settings are the source of headaches to this day.

So Many Choices, So Little Time

In the 80s, the PID hangovers from the 70s became available as function blocks that could be configured at will within the basic process control system. Real-time simulations were developed to test configurations and train operators. The benefits from advanced regulatory control, instrument upgrades, and migration from analog to distributed control far exceeded expectations. Continuous process control improvement became a reality.

Meanwhile, advanced process control (APC) technologies including constrained multivariable predictive control (CMPC), artificial neural networks (ANN), real-time optimization (RTO), performance monitoring and expert systems were commercialized. These new technologies required expensive software packages ($100,000 and up), separate computers, special interfaces, and consultants to do the studies and implementation. The total bill could easily approach or exceed $1 million for a medium-sized project, the biggest chunk being the consultant's fee.

Worse, the process knowledge needed not just to exploit the system effectively but also to maintain it disappeared when the consultants left the site. Even so, the incremental benefits from advanced multivariable control and global online optimization over advanced regulatory (PID) control were huge, and enough to justify an extensive deployment, as documented in benchmarking studies (1).

APC Integration

At the turn of the century, APC technologies were integrated into the basic process control system (2). Along with lower license fees, the total cost of system implementation decreased by a factor of 20 or more with the automation of a variety of steps including configuration, displays, testing, simulation and tuning.

For example, an adaptive controller (ADAPT), a fuzzy-logic controller (FLC), a model-predictive controller (MPC), and an artificial neural network (ANN) can be graphically configured and wired as simply as a PID function block. Figure 1 shows how an MPC is set up to maximize feed and an ANN is used to provide an online estimator of pH. A right-click on the MPC block offers the option to create an engineering display that, in turn, has buttons for automated testing and identification of the model for the MPC block.

Figure 1: A Maximum Feed Set Up

MPC and ANN applications are now as easy to configure as a PID.

Similarly, a right-click on the ANN block offers an engineering display for automated sensitivity analysis, insertion of delays, and training of the ANN block. Model verification is also available to compare the predicted versus the actual response of the MPC and ANN blocks, and applications can be launched for performance monitoring and simulation of all blocks.

Now that we have the tools at our fingertips, how do we make the most of the control opportunities the technologies can deliver?

Feel the Power

The speed at which new APC techniques can now be applied is truly incredible. In the time it takes to read this article, an APC block can be configured. Rapid APC can rejuvenate and empower you to take the initiative and become famous by Friday. Instead of wasting time arguing the relative merits of an APC solution, you can prototype it via simulation and demonstrate it via implementation. Nothing melts resistance to change more than success. Of course, it is still best that the application drive the solution and that a pyramid of APC technologies be built in layers on a firm foundation. APC is not a fix for undersized or oversized valves and stick-slip (3).

To find the opportunities with the biggest benefits, an opportunity sizing is used to identify the gaps between peak and actual performance (1, 2). The peak can be the best demonstrated from an analysis of cost sheets and statistical metrics. Increasingly, high-fidelity process simulations and virtual plants are used to explore new operating regions that are then verified by plant tests.

Big Opportunity

Perhaps the biggest opportunity for driving the application of APC is the development of online process performance indicators. Systems can now take online loop performance monitoring to an extraordinary level, but the proof of the pudding is in how these metrics affect the process, what incremental benefits have been achieved, and what benefits are still possible.

The key variable for process performance monitoring is the ratio of the manipulated flow to the feed flow. The controlled variable is best expressed and plotted as a function of this ratio. For example, pH is a function of the reagent-to-feed ratio, column temperature is a function of the reflux-to-feed ratio, exchanger temperature is a function of the coolant-to-feed ratio, and stack oxygen is a function of the air-to-fuel ratio.

The process gain, which is the slope of the curve, is usually quite nonlinear. In the absence of first principle simulations, an ANN can be trained to predict the controlled variable from ratios and temperatures. For pH control, the inputs to the ANN are the ratios for all reagents and the temperature, which affects the dissociation constants and activity coefficients. The future value of the ANN is its output computed without the input delays that are used to compare and correct the ANN against a measured value. This future value can be filtered and used to achieve much tighter control since dead time has been eliminated. The ANN future value can also be used to train a second ANN to predict a key ratio from a controlled variable.
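The idea that the process gain is the slope of the controlled variable plotted against the ratio can be illustrated with a small finite-difference calculation. This is only a sketch: the function name `process_gain` and the titration-curve points are hypothetical values chosen for illustration, not plant data.

```python
def process_gain(ratios, values):
    """Estimate the local process gain (slope) of a controlled variable
    versus the manipulated-to-feed flow ratio by finite differences."""
    if len(ratios) != len(values) or len(ratios) < 2:
        raise ValueError("need at least two matching points")
    gains = []
    for i in range(len(ratios) - 1):
        d_ratio = ratios[i + 1] - ratios[i]
        if d_ratio <= 0:
            raise ValueError("ratios must be strictly increasing")
        gains.append((values[i + 1] - values[i]) / d_ratio)
    return gains


# Hypothetical titration-curve points: reagent-to-feed ratio vs. pH.
ratios = [0.90, 0.95, 1.00, 1.05, 1.10]
ph = [3.0, 4.0, 7.0, 10.0, 11.0]
gains = process_gain(ratios, ph)

# The gain on the steep middle segments is several times the gain on the flat ends.
gain_ratio = max(gains) / min(gains)
```

With these made-up points the slope on the steep part of the curve is about three times the slope on the flat part, which is the kind of gain variation a single set of fixed tuning constants struggles with.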

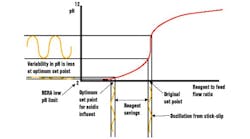

After training, which is achieved by ramping a key ratio to the first ANN to cover the operating range, a more optimal setpoint based on a reduction in variability is used as the input to the second ANN, which then predicts the associated ratio. Note that it is the variability in the ratio that predicts how much the setpoint can be shifted. This is best seen in pH control, where the variability in the ratio from a setpoint on the steep part of the curve can translate to a significant, acceptable shift in the pH setpoint to the flatter portion of the titration curve, and a huge savings in reagent cost, as shown in Figure 2.

Figure 2: Big Savings

Analysis of variability in ratio enables a huge reagent savings.

The predicted benefit from improved process control is the difference between the present ratio and the predicted, more optimal ratio, multiplied by the feed rate and the cost per pound of the manipulated flow. For columns, the reflux-to-feed ratio is converted to a steam-to-feed ratio to calculate energy cost. Ultimately, past and future benefits of APC can be trended to show behavior not recognized from just the display of numbers.
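The benefit calculation described above is simple arithmetic and can be sketched as follows. The function name, the assumption that the ratio is a mass ratio (pounds of manipulated flow per pound of feed), and all numbers in the example are illustrative assumptions rather than values from a real application.

```python
def predicted_benefit(present_ratio, optimum_ratio, feed_rate_lb_per_hr, cost_per_lb):
    """Return the predicted savings rate in dollars per hour.

    The ratios are assumed to be mass ratios of manipulated flow to feed flow
    (lb/lb), so ratio * feed rate gives the manipulated flow in lb/hr.
    """
    ratio_reduction = present_ratio - optimum_ratio
    return ratio_reduction * feed_rate_lb_per_hr * cost_per_lb


# Hypothetical case: reagent-to-feed ratio reduced from 0.012 to 0.010
# at 50,000 lb/hr of feed and $0.10 per pound of reagent.
savings_per_hr = predicted_benefit(0.012, 0.010, 50_000.0, 0.10)
```

Evaluating the same function on historical and predicted ratios at each sample gives the benefit values that can then be trended.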

A Virtual Prediction

A virtual plant can be run to provide a more accurate prediction of controlled variables and benefits if model parameters such as dissociation constants, heat transfer coefficients, and tray efficiencies have been adapted to match the plant (4). A novel method has been developed that uses MPC to simultaneously adapt multiple first-principle process model parameters (2). For this MPC, the targets are the plant measurements, the controlled variables are the corresponding virtual plant variables, and the manipulated variables are the key simulation parameters. The MPC models for adapting the virtual plant are readily developed by running the dynamic simulations offline faster than real time. The identification of the MPC models is done non-intrusively, and the adaptation of the virtual plant just requires the ability to read some key plant measurements (2).

Get it On

It used to be that if one were an MPC or ANN supplier or consultant, everything was either an MPC or an ANN solution, and never the twain shall meet. Now process engineers can choose the solution that best fits the application based on dynamics and interaction.

Table 1 summarizes a consensus of the author's first choices for APC process control techniques and online property estimators. It is important to realize that there is considerable overlap in the abilities of the APC techniques, and that the quality of the setup and tuning is often a more significant factor than the technology. Also, there are still some PID experts who can make a PID "stand on its head." These tables are not meant to rule out a technology but to help a person without any set technical predispositions get started. Consider sampling technologies from several columns when you start developing your next project.

In the table, under the category column, A through G show processes dominated by dead time, lag time, runaway response, nonlinearities, need for averaging control, interaction and the need for optimization, respectively. Category F considers not only interactions that require decoupling but also measured disturbances that require feedforward action. The Choice A column offers the first choices for process control solutions. The Choice B column offers the first choices for online property estimators used to predict a stream composition or product quality online from measurements of flows, densities, pressures, and temperatures.

Table 1

Diehard PID advocates can take solace in categories C and D for processes with severe non-self-regulating or extremely nonlinear responses. Here, high derivative and gain action is essential to deal with the acceleration of the controlled variable from the positive feedback response or increasing process gain. The approach to the steep portion of the titration curve can look like a runaway condition to a pH controller. Fast, pseudo-integrating processes, such as furnace pressure, can ramp off scale in a few seconds and require the immediate action from the gain and derivative modes of a PID controller (6). An adaptive controller can greatly help the tuning of a PID for slowly changing nonlinearities.

MPC is ideally suited for averaging control applications, such as surge tank level control, where tight control is not needed and it is more important not to jerk around a manipulated flow. Move suppression, which is the key MPC tuning factor, sets the degree to which variability in the controlled variable is transferred to the manipulated variable.
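The effect of move suppression can be seen in a deliberately simplified sketch. It assumes a single controlled variable, a single manipulated variable, a steady-state gain, and a one-step horizon, so the controller minimizes (error - gain * du)^2 + move_suppression * du^2. A commercial MPC uses identified dynamic models and longer horizons; the function name and numbers here are hypothetical.

```python
def suppressed_move(error, gain, move_suppression):
    """Return the move du that minimizes
    (error - gain * du)**2 + move_suppression * du**2.

    Setting the derivative to zero gives du = gain * error / (gain**2 + move_suppression).
    """
    if move_suppression < 0:
        raise ValueError("move suppression must be non-negative")
    denominator = gain * gain + move_suppression
    if denominator == 0:
        raise ValueError("gain and move suppression cannot both be zero")
    return gain * error / denominator


# Same level error and process gain, two different move suppression settings.
tight_move = suppressed_move(error=2.0, gain=1.0, move_suppression=0.0)
smooth_move = suppressed_move(error=2.0, gain=1.0, move_suppression=9.0)
```

With no move suppression the full error is corrected in one move. With a large penalty only a fraction of the error is passed on to the manipulated flow at each execution, which is the behavior wanted for surge tank level control.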

For interactions and measured disturbances, MPC is able to accurately include the effects of delay and lag times automatically from response testing and model identification. While PID controllers can have dynamic decoupling and feedforward signals, in practice these are implemented on a steady-state basis for a small number of interactions or disturbances.

The greatest benefits have been achieved from optimization. MPC is the choice here because it can simultaneously move set points to get greater process capacity or efficiency with the knowledge and ability to prevent present and future violation of multiple constraints. The delay and lag times associated with the effect of manipulated and disturbance variables on the constraint variables are automatically included in the optimization, which is not the case for traditional supervisory and advisory control.

Online property estimators, also known as "soft or intelligent sensors," have only recently gone mainstream, so the table is a "first look" that is sure to change as more experience is gained.

When properly applied, estimators can provide faster, smoother, and more reliable measurements of important process compositions and product quality parameters than analyzers (2). An analyzer is needed somewhere to provide the initial training and testing and the ongoing feedback correction of estimators. If the analyzer is in the lab, time-stamped samples at relatively frequent intervals are essential. For the training and verification of ANNs that predict stack emissions, skids of analyzers are rented. These ANNs are then accurate for six months or more if there are no significant changes in the process or equipment operating conditions. For most process applications, ongoing periodic feedback correction from an online or lab analyzer is needed (2). The delay and lag times, which are used in the estimator to time-coordinate the estimator output with an analyzer reading or lab result, are removed to provide a future value for process control.
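The time coordination and feedback correction described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the analyzer lag is treated as a pure delay of a fixed number of samples (the lag time is ignored), the correction is a simple bias update, and the class name `BiasCorrectedEstimator` and its parameters are hypothetical.

```python
from collections import deque


class BiasCorrectedEstimator:
    """Keep a short history of undelayed estimates so that a late analyzer
    result can be compared with the estimate made delay_steps samples ago."""

    def __init__(self, delay_steps, correction_fraction=0.5):
        self._history = deque(maxlen=delay_steps + 1)
        self._fraction = correction_fraction
        self.bias = 0.0

    def update(self, raw_estimate):
        """Store the undelayed estimate and return the corrected future value."""
        self._history.append(raw_estimate)
        return raw_estimate + self.bias

    def correct(self, analyzer_value):
        """Adjust the bias using the time-aligned (delayed) estimate."""
        if len(self._history) < self._history.maxlen:
            return  # not enough history yet to time-align with the analyzer
        delayed_estimate = self._history[0] + self.bias
        self.bias += self._fraction * (analyzer_value - delayed_estimate)


# Hypothetical run: the raw estimate reads 5.0 while the analyzer reports 6.0.
est = BiasCorrectedEstimator(delay_steps=2, correction_fraction=0.5)
for raw in (5.0, 5.0, 5.0):
    est.update(raw)
est.correct(6.0)
future_value = est.update(5.0)
```

The delayed copy is used only for the comparison with the analyzer. The value sent to the controller is the undelayed estimate plus the bias, which is what makes it a future value.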

An ANN inherently has an advantage for nonlinear processes with a large number of inputs that are dominated by delays. However, an ANN should not be used to predict values for inputs outside of the training data set because its nonlinear functions are not suitable for extrapolation. For linear processes with a relatively small number of inputs where lag times are more important, a linear dynamic estimator (LDE) that uses the same type of models identified for MPC has been demonstrated to be effective (2).

An LDE can be readily integrated into MPC to take interactions into account. It is important to realize that both an ANN and an LDE assume the inputs are uncorrelated. If this is not the case, principal component analysis (PCA) should be used to provide a reduced set of linearly independent latent variables as inputs. Multivariate statistical process control (MSPC) software can automatically do PCA and partial least squares estimators. MSPC packages offer the ability to drill down and analyze the relative contributions of each process measurement to a PCA latent variable, and are the best choice for linear processes with a huge number of inputs that are correlated and dominated by time delays (2).

Finally, first principle models (FPM) are the estimator of choice for runaway conditions and for finding and exploiting new optimums that are beyond the normal process operating range. Correlation of inputs and nonlinearity are nonissues if the equations and parameters are known. Traditionally, FPM has been applied through real-time optimization that employs steady-state, high-fidelity process simulations. More recently, virtual plants that use dynamic high-fidelity simulations running faster than real time have been used to provide future values of key concentrations in important streams (2). Virtual plants can also play an important role in developing process data that cover a wider range of plant operation for the training of an ANN. Most plant data tend to be at one operating point unless plant testing or optimization is in progress. Plant operations are conservative, especially when customers or the Food and Drug Administration insist on no changes. Virtual plants instill the confidence and provide the documentation needed to try new process set points and control strategies that are important for gaining competitiveness.

References

(1) McMillan, Gregory, "Opportunities in Process Control", Chemical Manufacturer's Association (CMA) white paper, June 1993.