Twenty-five years ago, companies would never trust their hardcore industrial processes to desktop PC hardware and operating systems, or trust them to an online bookseller, search engine startup or light bulb maker. However, business models change, and Amazon has been in the cloud-computing game for more than a decade.

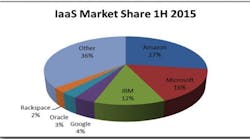

Today, Amazon Web Services (AWS) dominates the Infrastructure as a Service (IaaS) business (Figure 1). Its annual revenue hovers at roughly $7 billion, far ahead of IT mainstays IBM and Oracle, and it competes with second-place Microsoft Azure, Google Cloud and now GE’s related Predix offering.

A few years ago, control engineers would have found it unthinkable for a corporate IT analyst to check in on the performance of a valve or pressure transmitter—much less bypass the plant historian, DCS and control network. Today, this is just the kind of chasm-crossing enabled by the Industrial Internet of Things (IIoT).

But it’s not easy. “The process industries have some particular challenges, requiring individual IoT solutions,” says Tanja Rueckert, executive vice president of IoT and customer innovation at SAP. She acknowledges the “very heterogeneous, long-living assets that may not always be instrumented to modern standards,” which can gain from “IoT solutions” that bring broad-ranging benefits to operations, maintenance, energy consumption, yield and quality.

Serving up infrastructure

Figure 1: Infrastructure as a service (IaaS) lets users set up virtual servers and storage in a provider's data center. The largest providers include Amazon, Microsoft and IBM, according to Statista's report at www.statista.com/topics/1695/cloud-computing/. [GRAPHIC CREDIT] Statista

Challenges in the field

So what are the key challenges slowing IIoT adoption in process plants? Cybersecurity compliance (with ICS-CERT, etc.), aging plant assets and an aging, IIoT-unaware workforce are among the greatest of them. Add to that the many “disparate data sources that can't properly be integrated and analyzed for insights into operating conditions or business opportunities,” says Todd Gardner, vice president of Siemens Industry Inc.'s Process Automation Business. “And, perhaps above all, a general tendency to not yet recognize the full potential that lies waiting to be exploited in a digital enterprise.”

Gardner adds, "Process manufacturers are already experiencing the pains of evolving digitalization. One of the greatest challenges is for manufacturers to become resolved to modernize and more fully integrate their automation environment. Making such a decision also immediately helps with a second great challenge, the growing threat of cyber-attack. In fiscal year 2015, ICS-CERT responded to a record 97 incidents in the Critical Manufacturing sector, nearly doubling the 2014 number and becoming the incident leading sector for ICS-CERT. Another 71 incidents were reported between the Energy sector and Water and Wastewater Systems sector.

"It's those same islands of automation, coupled with an antiquated, modular security concept, that make too many plants soft targets today, despite many expensive and complicated countermeasures being put into place. Again, the way out of that box is to modernize and integrate the automation environment, with security built natively into the system from the field level up."

Connectivity, computing, power and cloud

Of course, Greg Conary, senior vice president of strategy at Schneider Electric, was right when he blogged last year, “The Industrial Internet of Things is an evolution, not a revolution.”

Revolution or not, Dan Miklovic, principal analyst with LNS Research, calls IIoT “disruptive” in the best sense, and a “perfect storm” of connectivity, computing power and cloud capabilities. But, “The beauty of IIoT is that you don’t have to rip and replace," adds Miklovic. "You can do it as a phased implementation, and start with the low-hanging fruit. When a device fails, or when it’s time to upgrade a system, newer-generation replacement alternatives are already in the marketplace.

"IoT makes both the data and computing power readily accessible to anyone anywhere at any time. This, despite the fact that IoT still has some security and other issues to resolve so some unauthorized user on a smartphone in Poughkeepsie, N.Y., doesn’t shut down a nuclear reactor in San Bernardino, Calif. These are being ironed out. Plant databases are now converting to ‘data lakes in the cloud.’ Even if the data aren’t hosted in the cloud, it’s accessible to the cloud you want to deploy such as Predix, Amazon, Microsoft, Google or others as long as that data has the right descriptive metadata."

Process control and similar applications now use all manner of SQL and OPC tools to connect to the plant databases; field personnel can now link directly to plant historians; and even analog instruments can talk more easily to other systems, as in the case of WirelessHART with HART-based Internet protocol (IP), which connects to a plant's Ethernet TCP/IP infrastructure. People think of IIoT as “passing massive amounts of data, but the real next big thing is about passing small amounts of data from massive amounts of devices,” says Bob Karschnia, vice president of wireless at Emerson Process Management, which has long championed the mantra of “pervasive sensing.”

Bridging OT and IT

If IP-based communications become more standard in process instruments, the operations technology/information technology (OT/IT) architectural bureaucracy could flatten, and hasten the ability of big data and cloud-based analytics to span the sensor to supply chain with new immediacy. Miklovic envisions IP-enabled instrumentation gaining the ability to connect directly to enterprise resource planning (ERP) systems to report, for instance, on the status of consumables without the signal going through PLCs or supervisory applications.

What of the OT/IT wall? OT and IT are already closer than kissing cousins, with only human judgment standing in the way.

Miklovic explains, “One man’s OT is another man’s IT” from industry to industry. “If you’re a bank, IT is your OT; if you’re a manufacturer, your financial systems are clearly IT, and the PLCs on your floor are pretty clearly OT. But what’s MES? What is a computerized maintenance management system? What’s a quality system that does real-time measurements on the shop floor? I’ve talked to different plants in the same company, and some might call them IT, while others call them OT.”

Cemex shows OT/IT integration

The news was building last year when cement and building materials supplier Cemex went public with its data analytics success in integrating PI Historian software from OSIsoft with SAP's Hana, an in-memory (as in RAM-based) system for real-time, ad hoc analysis of large volumes of data. In January, SAP reported it would sell the Hana IoT connector by OSIsoft, and OSIsoft would in turn sell its SAP Hana IoT integrator.

Traditionally, it’s been a challenge to query plant databases, as in “process, cleanse and filter the data, so it can be used in advanced analytics tools,” says Dominic John, vice president of industry and marketing for OSIsoft. Now users can move data from PI to Hana to “explore and answer the questions they need” with no help from IT.

Back at Cemex, a typical data extraction across 70 sites used to take approximately 740 hours, but “when we went to PI integrator? One hour. Actually it’s less: five minutes or even one minute. It's that good,” says Cemex user Rodrigo Quinero, who spoke to attendees at last year’s OSIsoft European user conference.

Think global, buy into local

Beneath the promise of everything-connected, always-on systems, what corporate IT might see as a minuscule trickle of field device data is actually a tsunami, analogous to Twitter's “tweets,” that will feed tomorrow’s process-industry data clouds.

“At some point, you need to realize that underlying all of this, there’s somebody in the field wearing coveralls and a hard hat and twisting a screwdriver,” says Dennis Nash, president and CEO of Control Station. Its control loop performance-monitoring application, PlantESP, which Rockwell announced will soon be offered in its PlantPAx DCS, provides dynamic data modeling for dozens or hundreds of daily setpoint and output changes at plants to uncover opportunities for better, cost-saving tunings. Its browser-based intranet GUI lets users from the field to the corporate office track, compare and contrast processing units to “identify your top 10% performers and bottom 10% performers, and manage your business more effectively.”
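To make the "top 10% and bottom 10%" idea concrete, here is a minimal Python sketch. It is not how PlantESP works internally; the function name, the loop names and the single per-loop score (higher is better) are all assumptions made for illustration.

```python
def top_and_bottom(scores, percent=10):
    """Split loops into best and worst performers by a hypothetical score.

    scores  -- dict mapping loop name to a score where higher is better
               (the scoring itself is assumed, not taken from any product)
    percent -- whole-number share of loops to report at each end
    """
    if not scores:
        return [], []
    # Integer arithmetic avoids floating-point surprises; always report
    # at least one loop at each end.
    count = max(1, len(scores) * percent // 100)
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:count], ranked[-count:]


# Hypothetical example: 20 loops scored 0..19.
example_scores = {f"loop{i}": i for i in range(20)}
best, worst = top_and_bottom(example_scores)
```

With 20 loops and the default 10%, each list holds two loop names, the best-scoring first in `best` and the worst-scoring last in `worst`.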

For fast-moving processes, Nash recommends hosting data super-locally in an optional micro-historian, which sheds old data in a first-in, first-out (FIFO) manner, because plant databases may be limited to saving data at 10-minute intervals. That sampling rate could work for some processes, but not well enough for a refinery with 10,000 loops and constantly changing process conditions, according to Nash. In some situations, data has to be local.
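The FIFO shedding Nash describes can be illustrated with a small Python sketch. This is a simplified stand-in, not Control Station's implementation; the `MicroHistorian` and `Sample` names and the capacity of five samples are hypothetical.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    timestamp: float  # seconds since an arbitrary start
    value: float      # a process reading, units assumed


class MicroHistorian:
    """Hypothetical fixed-capacity buffer that sheds the oldest sample first."""

    def __init__(self, capacity):
        # A deque with maxlen drops items from the left when a new item is
        # appended on the right, which gives first-in, first-out shedding.
        self._samples = deque(maxlen=capacity)

    def record(self, timestamp, value):
        self._samples.append(Sample(timestamp, value))

    def window(self, start, end):
        """Return retained samples with start <= timestamp <= end."""
        return [s for s in self._samples if start <= s.timestamp <= end]

    def __len__(self):
        return len(self._samples)


# Hypothetical usage: eight one-second samples into a five-sample buffer.
historian = MicroHistorian(capacity=5)
for second in range(8):
    historian.record(float(second), 100.0 + second)
```

After the loop, only the five most recent samples remain; the first three were shed as newer ones arrived. A real micro-historian would more likely bound retention by time span or disk space than by sample count.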

"When an engineer at his desk says, ‘I need more data so that I can understand what’s going on in my process!’ then the IT group can respond, ‘What do you want, a new laptop or another terabyte of storage?'"

Browser-based analytics

That need could change as engineers continue to use apps locally, while at the same time cross-pollinating their analyses with data based in IT systems. For example, an engineer using the browser-based process analytics Workbench from Seeq can manipulate and compare time-series data from local databases and process databases (such as those from OSIsoft, Emerson, Honeywell, Wonderware and others) and now incorporate data from IT's Hadoop distributed file system or data from Microsoft Azure or another cloud service.

Another aspect of IIoT is the “NoSQL” database, the kind of general-purpose service that includes “the storage engines that power Facebook and LinkedIn” to store data and query relationships, explains Michael Risse, vice president at Seeq. He also cites some data-inspired software engineering that's analogous to how Google searches use MapReduce or the way deep learning powers Siri voice commands on the Apple iPhone.

When engineers “frequently cross over from OT to IT datasets,” Risse says, it can improve production results for quality, margins and more. He cites examples that include engineers doing “investigations into batch quality with respect to ingredient suppliers, asset mean time between failures (MTBF) in the context of service records, or profitability based on recognized sales price.”
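As a rough illustration of the first investigation Risse mentions, the Python sketch below joins OT-side batch quality results to IT-side supplier records by batch ID. The function name, the batch IDs, the supplier names and the quality scores are all invented for the example; real data would come from a historian and a business system.

```python
from collections import defaultdict
from statistics import mean


def quality_by_supplier(batch_quality, batch_supplier):
    """Average a quality score per ingredient supplier.

    batch_quality  -- dict of batch ID -> quality score (OT side, assumed)
    batch_supplier -- dict of batch ID -> supplier name (IT side, assumed)
    Batches with no supplier record are skipped.
    """
    grouped = defaultdict(list)
    for batch_id, quality in batch_quality.items():
        supplier = batch_supplier.get(batch_id)
        if supplier is not None:
            grouped[supplier].append(quality)
    return {supplier: mean(values) for supplier, values in grouped.items()}


# Hypothetical records: quality as a whole-number percentage.
ot_quality = {"B1": 90, "B2": 70, "B3": 85, "B4": 60}
it_suppliers = {"B1": "Acme", "B2": "Acme", "B3": "Globex"}
averages = quality_by_supplier(ot_quality, it_suppliers)
```

Batch B4 has no supplier record, so it drops out of the result. In practice those unmatched batches are often the first data-quality problem an OT-to-IT join exposes.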

To avoid using SQL, Seeq uses “a property graph database geared toward querying relationships across nodes to work with data and relationships between data in objects called 'capsules' that store 'time periods of interest' and related data used to compare machine and process states, save data annotations, enable calculations, and perform many other tasks,” Risse says, turning them into “intuitive data objects used to manipulate and compare time ranges, and their scalability, simplicity, and storage capabilities. They require no big data or other programming skills, or even awareness of big data and other underlying platforms.”
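The capsule idea, a "time period of interest" carrying related data, can be sketched in a few lines of Python. This is not Seeq's API or data model; the `Capsule` class, its fields and the `overlap` method are assumptions chosen only to show how time ranges might be compared as objects.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Capsule:
    """Hypothetical time period of interest with attached properties."""
    start: float  # seconds since an arbitrary start
    end: float
    properties: dict = field(default_factory=dict)

    def __post_init__(self):
        if self.end < self.start:
            raise ValueError("capsule end must not precede its start")

    @property
    def duration(self):
        return self.end - self.start

    def overlap(self, other):
        """Return the shared time range as a new Capsule, or None."""
        start = max(self.start, other.start)
        end = min(self.end, other.end)
        if start >= end:
            return None
        # Merge properties so the result records both contexts.
        return Capsule(start, end, {**self.properties, **other.properties})


# Hypothetical usage: when did an alarm coincide with a batch?
batch = Capsule(0, 100, {"batch": "B1"})
alarm = Capsule(80, 120, {"alarm": "high temperature"})
shared = batch.overlap(alarm)
```

Here `shared` covers the 20 seconds from 80 to 100 and carries both the batch and alarm properties, which is the kind of comparison across machine and process states the quote describes.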

GE collects all, stores all

Meanwhile, General Electric’s Brilliant Manufacturing strategy, now being rolled out at the company’s manufacturing sites and in its automation software, prescribes a “collect it all, store it all” approach to databases, says Rich Carpenter, chief of strategy for GE's Automation and Controls. Over time, he says, plant data rolled upward into corporate-level process analytics is needed to uncover hidden opportunities for improvement.

"We may have 1,000 units of the same type with repeated failure scenarios and corrective actions captured in a cloud environment,” says Carpenter. However, he adds that monitoring and predictive analytics inside GE’s manufacturing software, now packaged into the division’s SmartSignal offering, can detect and diagnose similar patterns, and address them “automatically, continuously and relentlessly, and do it 24/7 for optimized asset performance management.”

This is possible because GE's data scientists constantly pore over historical data to find new patterns among assets “we didn’t know existed, and correlate them to events that we want to avoid in order to optimize our processes.” The dataset, he adds, goes beyond manufacturing equipment to include business, economic and even climate trends that can influence operations.

Additionally, GE’s recent shedding of its financial services and NBC-TV divisions has freed dollars for all manner of projects, including the $1 billion-plus investment that led to its Predix cloud-based, platform-as-a-service (PaaS) offering. It promises out-of-the-box IIoT data center connectivity, app development and hosting—starting with its own Proficy Historian and SmartSignal analytics apps, as well as a secure platform for global process, energy, quality and maintenance management.

— Bob Sperber is a frequent contributor to Control.