Process Automation Programming Language: One for All

William M. Hawkins is a member of Control's Process Automation Hall of Fame (2008) and author of the recently published book, Automating Manufacturing Operations: The Penultimate Approach, Momentum Press, 2013.

The problems associated with opaque automation are already with us. The most common way to obscure a process is with a flood of alarms, so good work is being done in the alarm management field. Early fly-by-wire aircraft flew into terrain when the pilots were unable to correct what the automation was doing because they didn't understand what was going on. The investigators blamed the crashes on opaque automation.

A continuous process doesn't have much automation aside from its alarms and interlocks. The emphasis is on making the measurements of the state of the process available to operators, while those measurements are held to setpoints by controllers. This is changing as ways to apply procedural control are being developed. It's not that continuous processes don't have procedures—the standard operating procedures manual for a process is full of them. Only recently has the procedural control developed for batch processes been considered for use with continuous processes.


Automating process procedures can easily lead to opaque automation when the procedure can go in different directions, depending on alarms and changes in the process. An operator who has no idea what will happen next has a limited ability to keep the process out of trouble. In a chemical process, the equivalent of flight into terrain is often a fire and possibly an explosion. Discrete processes lose production when part of a machine breaks and the debris falls into a gearbox.

The principal reason procedures become opaque is that they're designed by clever (not to say overly inventive) engineers and programmers who focus on getting the job done without considering the need for a clear human interface. The result is inscrutable logic encoded into procedures that ordinary mortals can't read, given that most people don't know a database from first base.

Change is required in the way that procedures are designed, but people don't want to change if what they have works. They have to be shown that what they think works really isn't working, and that can get expensive. Some towns don't install a traffic light until someone is killed at the intersection.

One reason that procedures are encoded is efficiency of computation. Years ago, programmers resorted to assembly language programs to save expensive memory and speed up slow applications. Today's computers have several orders of magnitude more memory and speed. Tomorrow's computers could make today's look ridiculous, especially if quantum weirdness can be tamed. Efficiency of computation is not an issue with automation-scale applications.

Another factor that complicates procedures is the number of translations that must occur between the user's requirements and the functioning machine. An engineer must understand what the user wants, which requires an engineer who has worked as a user. A programmer must understand what the engineer wants, but it is more difficult to find programmers who understand anything but getting code to work in a computer. The problem must be reduced to mathematics and branching tests.

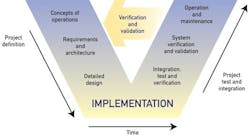

The U.S. FDA requires a paper-laden trail through the V-model (Figure 1) to assure that software will do what the user required. Users aren't always good at defining exactly what they want ("I'll know it when I see it"), which leads to multiple iterations of the V-model until the result looks like what the user wants. Even then, the user's idea of what is wanted may change once the software is actually in use and flaws in the human interface come to light.

Figure 1: In the V-model software development process, the horizontal axis represents time or project completeness (left to right), and the vertical axis represents level of abstraction (coarsest-grain abstraction uppermost). Source: Federal Highway Administration (FHWA), 2005.

The situation today is not unlike the early days of telegraphy when a user drafted a message and took it to a telegraph office. A telegrapher, who knew how to get the message to its destination, translated it to Morse code. The receiving telegrapher decoded it, and gave it to a messenger who took it to the recipient. Then the telephone was invented, and most of that structure went away. The sender could deliver the message to the receiver directly. Well, "directly" if you don't count wiring, switching, trunk lines and central offices that made it possible. Obsolete Pony Express riders could say "I told you so" to the telegraphers.

Natural Language Should Be the Norm

First, it's necessary to stop thinking of controlled process equipment as computer peripherals. Process equipment is designed and purchased to provide process functions, such as mixing, distilling, heating, machining, assembly and packaging. The equipment is inert until it's controlled by a human, or automated by a computer or other machine.

With that shift, it's possible to think of a set of process equipment as a human worker who has been automated by a computer, sort of like a robot. Consider the control computer in such a set to be a "worker" that communicates with a "supervisor." This is, of course, the way things were done in those halcyon days before computers.

The control for a set of equipment must be designed to accept commands from a supervisor, to reply with status, and to initiate communication for alarms and events. The commands, status and alarms are all related to the function of the equipment. They have no relation to the product being made. These concepts were laid out by ISA-88 in 1995, although not in these words.
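The worker side of this arrangement can be sketched as an object that accepts commands, replies with status, and pushes alarms and events to its supervisor without being asked. This is a minimal illustration only: the class `EquipmentWorker`, its methods `command`, `status` and `raise_alarm`, the `on_event` callback, and the mixing function are hypothetical names chosen for the example, not ISA-88 terminology or any vendor's API. Note that every command and report refers to the equipment's function (mixing), never to the product.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EquipmentWorker:
    """Hypothetical 'worker' that exposes only its process function (here, mixing)."""
    name: str
    on_event: Callable[[str], None]   # channel the worker uses to speak up unasked
    state: str = "idle"               # idle -> ready -> running -> done
    speed_rpm: float = 0.0

    def command(self, verb: str, **params: float) -> str:
        """Accept a command from the supervisor and reply in plain words."""
        if verb == "setup" and self.state in ("idle", "done"):
            self.speed_rpm = params.get("speed_rpm", 0.0)
            self.state = "ready"
            return f"{self.name}: set up to mix at {self.speed_rpm:g} rpm"
        if verb == "start" and self.state == "ready":
            self.state = "running"
            return f"{self.name}: mixing started"
        if verb == "stop" and self.state == "running":
            self.state = "done"
            self.on_event(f"{self.name}: mixing complete")   # event the supervisor did not ask for
            return f"{self.name}: mixing stopped"
        # A command that makes no sense in the current state is refused, not guessed at.
        return f"{self.name}: cannot {verb} while {self.state}"

    def status(self) -> str:
        """Reply to a status request from the supervisor."""
        return f"{self.name} is {self.state}"

    def raise_alarm(self, text: str) -> None:
        """Initiate communication: report a problem without being asked."""
        self.on_event(f"{self.name} ALARM: {text}")
```

For example, a worker created with `EquipmentWorker("Bob", on_event=events.append)` answers `"Bob: cannot start while idle"` if told to start before it has been set up, which keeps the supervisor's view of the equipment honest.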

The equipment controller can reduce the number of alarms from the equipment if it knows the state of the process, or it can just report a red, yellow or green status. If the status isn't green, the operator must examine detailed displays to find out why the equipment is not functioning correctly. If the equipment knows that it's not being used, then it can suppress the alarms that are associated with normal functions.
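One way to read this paragraph is as a filter between the raw alarm list and the operator. The sketch below assumes a hypothetical `Alarm` record in which each alarm is flagged as meaningful only while the equipment is in use; when the equipment knows it is idle, those alarms are suppressed, and whatever remains is collapsed into a single red, yellow or green indication. The two severity levels and the rule mapping them to colors are illustrative assumptions, not taken from any alarm-management standard.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Alarm:
    tag: str
    severity: str        # hypothetical levels: "high" or "low"
    in_use_only: bool    # True if the alarm only matters while the equipment is working


def active_alarms(alarms: List[Alarm], in_use: bool) -> List[Alarm]:
    """Suppress alarms tied to normal functions when the equipment knows it is not being used."""
    return [a for a in alarms if in_use or not a.in_use_only]


def summary_status(alarms: List[Alarm], in_use: bool) -> str:
    """Collapse the remaining alarms into one red/yellow/green indication."""
    remaining = active_alarms(alarms, in_use)
    if any(a.severity == "high" for a in remaining):
        return "red"
    if remaining:
        return "yellow"
    return "green"


raised = [
    Alarm("low agitator speed", "low", in_use_only=True),
    Alarm("low jacket flow", "high", in_use_only=True),
    Alarm("vessel high pressure", "high", in_use_only=False),
]
```

Only when the summary is not green would the operator open the detailed display, which is where the output of `active_alarms` belongs.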

Given process functions with these capabilities, procedures for a "supervisor" can be written in terms of instructing the equipment, interpreting its status, and responding to exceptions (alarms and alerts). All that remains is to define the language to be used for communication between "supervisors" and "workers." Perhaps you've seen the cartoon of a physics professor at his chalkboard with an incomprehensible series of terms in an equation followed by a balloon containing the words, "Now a miracle occurs."

Natural languages and language processors can be developed, but the users will have to drive that development. System vendors profit from all of that translation and opacity.

If the equipment functions are given names like Bob and Ray, then a "supervisor" running a procedure can ask Bob for its status. If it's OK, ask Bob to set up its function with specific setpoints. If there are no exceptions from Bob, tell it to start its function and report back when done. Meanwhile, deal with exceptions as they occur. At the same time, Ray (and others) can be told to do something else, which may be conditional on what Bob is doing. Bob and Ray may even need to talk to each other.
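The conversation with Bob and Ray can be written as a procedure that reads almost like the paragraph itself. The sketch below is hypothetical throughout: the `Worker` class, its methods (`ask_status`, `set_up`, `start`, `report_done`), the `run_procedure` function, and the setpoint values are assumptions for illustration, and the workers answer immediately instead of running real equipment. Each exchange is recorded in a plain-language transcript that an operator could follow.

```python
from typing import Dict, List


class Worker:
    """Hypothetical stand-in for an equipment function such as Bob or Ray."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.state = "idle"
        self.setpoints: Dict[str, float] = {}
        self.exceptions: List[str] = []      # alarms and alerts this worker has reported

    def ask_status(self) -> str:
        return "OK" if not self.exceptions else "not OK"

    def set_up(self, **setpoints: float) -> None:
        self.setpoints = dict(setpoints)
        self.state = "set up"

    def start(self) -> None:
        self.state = "running"

    def report_done(self) -> str:
        # A real worker would report back when its function ends; this stand-in finishes at once.
        self.state = "done"
        return "done"


def run_procedure(bob: Worker, ray: Worker, transcript: List[str]) -> bool:
    """Supervisor procedure written as a readable conversation; returns False if it had to hold."""
    say = transcript.append
    status = bob.ask_status()
    say(f"Supervisor: Bob, what is your status? Bob: {status}")
    if status != "OK":
        say("Supervisor: Bob is not OK, holding the procedure")
        return False
    bob.set_up(temperature_c=80.0)
    say("Supervisor: Bob, set up to heat to 80 C")
    if bob.exceptions:                       # an exception could arrive during setup
        say(f"Supervisor: Bob reported {', '.join(bob.exceptions)}, holding")
        return False
    bob.start()
    say("Supervisor: Bob, start heating and report back when done")
    if bob.state == "running":               # Ray's task is conditional on what Bob is doing
        ray.set_up(speed_rpm=60.0)
        ray.start()
        say("Supervisor: Ray, Bob is heating, so start stirring at 60 rpm")
    say(f"Bob: {bob.report_done()}")
    return True
```

The design choice worth noting is that the transcript is the procedure's own record, not a separate log written afterward, so what the operator reads is exactly what the supervisor did.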

The trick is that all of these "conversations" are done in human-readable natural language. A human interface must make it possible to see where the process is in the procedure and what might happen next. If the "supervisor" predicts that it's losing control of the process, it can request that the operator take control. The operator can mash the shutdown button or talk directly to Bob and Ray to resolve the problem. Control can then be returned to the "supervisor" at a point in the procedure where it's safe to resume.
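The hand-off described here requires the procedure to know where it is and which of its steps are safe places to resume. A minimal sketch, assuming a hypothetical list of named steps, each flagged as a safe restart point or not, and a `losing_control` predicate that stands in for whatever prediction the supervisor actually makes. When that predicate fires, the procedure hands control to the operator; when control comes back, it resumes from the most recent safe step rather than from wherever it stopped.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Step:
    name: str
    safe_to_resume: bool    # hypothetical flag: may the procedure restart at this step?


class Procedure:
    """Tracks where a procedure is, who is in control, and where it can safely resume."""

    def __init__(self, steps: List[Step]) -> None:
        self.steps = steps
        self.position = 0                     # index of the next step to run
        self.last_safe: Optional[int] = None
        self.in_control = "supervisor"

    def where_am_i(self) -> str:
        """Human-readable position, so the operator can see what comes next."""
        if self.position >= len(self.steps):
            return "procedure complete"
        return f"next step: {self.steps[self.position].name} ({self.in_control} in control)"

    def run(self, losing_control: Callable[[Step], bool]) -> None:
        """Run steps until finished or until control is handed to the operator."""
        while self.position < len(self.steps) and self.in_control == "supervisor":
            step = self.steps[self.position]
            if step.safe_to_resume:
                self.last_safe = self.position    # remember the latest safe restart point
            if losing_control(step):
                self.in_control = "operator"      # request that the operator take control
                return
            # A real procedure would command the equipment here.
            self.position += 1

    def return_control(self) -> None:
        """Operator hands control back; resume at the last safe step, or at the start."""
        self.position = self.last_safe if self.last_safe is not None else 0
        self.in_control = "supervisor"
```

With steps `charge`, `heat`, `react`, `cool` in which only `react` is unsafe, losing control during `react` leaves the display reading `next step: react (operator in control)`, and returning control restarts the procedure at `heat`.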

If the idea of human-readable procedures and control conversations seems impossible, take out your smartphone and ask its assistant.
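A smartphone assistant handles open-ended speech, which is far more than a process procedure needs. As a rough illustration of the smaller problem, the sketch below parses a hypothetical controlled vocabulary of the form "<Name>, <verb>", optionally followed by "<setpoint> to <value> <unit>", into a structured command a worker could act on. The sentence pattern, the verb list, and the function name `parse_command` are assumptions made for this example; a practical language processor would need a much richer grammar.

```python
import re
from typing import Dict, Optional, Union

# Hypothetical controlled-English form: "<Name>, <verb>" optionally followed by
# "<setpoint> to <number> [<unit>]", e.g. "Bob, set temperature to 80 C".
PATTERN = re.compile(
    r"^(?P<target>[A-Z][a-z]+),\s+(?P<verb>[a-z]+)"
    r"(?:\s+(?P<setpoint>[a-z ]+?)\s+to\s+(?P<value>-?\d+(?:\.\d+)?)\s*(?P<unit>[A-Za-z%/]+)?)?"
    r"\.?$"
)

KNOWN_VERBS = {"start", "stop", "hold", "set", "report"}


def parse_command(sentence: str) -> Optional[Dict[str, Union[str, float, None]]]:
    """Turn one plain-language instruction into a structured command, or None if not understood."""
    match = PATTERN.match(sentence.strip())
    if match is None or match.group("verb") not in KNOWN_VERBS:
        return None    # refuse rather than guess; an unclear order goes back to a human
    command: Dict[str, Union[str, float, None]] = {
        "target": match.group("target"),
        "verb": match.group("verb"),
    }
    if match.group("setpoint") is not None:
        command["setpoint"] = match.group("setpoint")
        command["value"] = float(match.group("value"))
        command["unit"] = match.group("unit")
    return command
```

Rejecting anything outside the vocabulary is deliberate: an instruction the system cannot read plainly should go back to a person instead of turning into opaque behavior.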

[For more, see the book Automating Manufacturing Operations: The Penultimate Approach, William M. Hawkins, Momentum Press, 2013.]
