We control the planet not because humans are the strongest, fastest or biggest creatures, but because, as of yet, we’re the smartest of all God's creations. The totality of our mental processes has allowed us to adapt best to our environment. This has been the case since the end of the last ice age, but it is ending because of our own activity. This ending does not endanger nature, but it could endanger us. This new chapter in our evolution was opened by automation, which delegated some of our muscle and brain functions to machines.

Human or "normal intelligence" is the totality of all mental processes used to acquire and apply knowledge. When we delegate some of that intelligence to machines, they acquire artificial intelligence (AI). In the very beginning of the age of automation, this delegated intelligence was usually limited to single tasks, such as controlling the room temperature with a thermostat or refilling a toilet tank to the right level. Alexander Cumming patented his flush toilet design less than 250 years ago; just imagine where we will be in another 250 years.

During the past couple of centuries, the science of process control has evolved to the point where today we can measure just about anything, develop algorithms to evaluate just about any number of measurements, and initiate any variety of actions in response to what we have detected. (Stephen Hawking, who lost all his body functions except the working of his brain and one muscle on his cheek, explained the beginning of time and the universe by stopping a moving cursor on a computer screen using an infrared sensor to detect the movement of that muscle.) It was only 75 years ago that John von Neumann proved that the computer could perform all calculations. This tool in our "toolbox" provided practically unlimited memory capacity and immense speed to implement even the most complex process control algorithms.

Today, this knowledge is moving out into daily life. Robots and instant communication are making our lives even easier, but when these machines acquire AI, we will be moving onto a slippery slope that leads into unknown territory. The ability to make thousands of complex decisions in a millisecond could either help or harm. In any case, the computer started a new age by introducing a new, artificial factor into our real environment, and adapting to this new factor will influence our own evolution.

When we designed the first gadgets that made industry safer and more efficient, we didn’t realize where this would lead. Next, we designed smart instruments that were able to self-diagnose and request their own recalibration or maintenance. This road eventually led to robots, and today, we are beginning to realize that these human creations can be more than mechanical slaves. In fact, we are just beginning to realize that these robots could outsmart us, and that we might reach the age when we are no longer the smartest creations, because they could possibly take control from us (Figure 1).

Figure 1: Do we understand what we are creating? (Source: https://yearofai.wordpress.com/2017/01/01/the-year-of-artificial-intelligence-is-here)

Who controls AI?

AI’s impact will depend on how we control it. In previous control applications, we were always able to determine, or at least estimate, the consequences of what we were doing, but in the case of AI, we cannot. AI is a "dual-use technology," meaning that it’s capable of doing both harm and good. The ability of Facebook or the government to know where each of our cellphones is should be both a hint and a warning that smart machines could turn against us.

This is what worries some smart people, including Hawking, Gates and Musk, because in their view, a hyper-intelligent machine could decide against supporting the continued existence of human civilization, using tools like brainwashing or misinformation. Therefore, safeguards are needed to make sure that super-intelligent machines don’t get out of control. Yet some of our best brains (at SAP, DeepMind, Alphabet, Facebook, Google, Fujitsu, etc.) are moving full speed ahead with the development of super-intelligent and deep learning AI without caring about safety.

Some might argue that we shouldn’t worry about the consequences of AI because they can’t be any worse than the consequences of what we are already doing.

They argue that when we allow the existence of nuclear weapons, climate change and attacks on the institutions of democracy (which has taken 2,500 years to refine since the Greeks invented it), then the quality of our present intelligence is also debatable. These people are wrong, because process control teaches us that there is a big difference between not caring to control a process and not being able to control it. Today, we could still correct the above-listed problems, while Hawking and others believe that if super AI enters our cultural environment, we might no longer be able to do so.

Neural networks

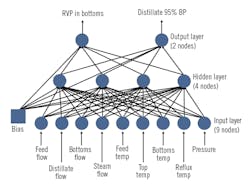

In industrial controls, we have long been using neural networks to control our multivariable processes. For example, we predicted the physical properties of distillates on the basis of about a dozen measurement inputs and a back-propagation neural network having three layers (one hidden) of nodes and links, each having different gains and biases (Figure 2).
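To make the structure of such a network concrete, here is a minimal sketch in plain Python. It is not the model used for the distillate predictions; the class name `ThreeLayerNet`, the five hidden nodes, the sigmoid activation and the learning rate are all assumptions chosen only for illustration. It shows a dozen inputs feeding one hidden layer and a single output, with every link carrying a gain (weight) and every node a bias, and one back-propagation step that adjusts them.

```python
import math
import random


def sigmoid(x):
    """Squashing function used by the hidden nodes (an assumed choice)."""
    return 1.0 / (1.0 + math.exp(-x))


class ThreeLayerNet:
    """Hypothetical input -> one hidden layer -> single output network."""

    def __init__(self, n_inputs=12, n_hidden=5, seed=0):
        rng = random.Random(seed)
        # One gain per link, one bias per node, started at small random values.
        self.w_hidden = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
                         for _ in range(n_hidden)]
        self.b_hidden = [0.0] * n_hidden
        self.w_out = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b_out = 0.0

    def forward(self, x):
        """Return the hidden activations and the (linear) output."""
        hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
                  for row, b in zip(self.w_hidden, self.b_hidden)]
        y = sum(w * h for w, h in zip(self.w_out, hidden)) + self.b_out
        return hidden, y

    def predict(self, x):
        return self.forward(x)[1]

    def train_step(self, x, target, lr=0.1):
        """One back-propagation step on the loss 0.5 * (y - target)**2."""
        hidden, y = self.forward(x)
        err = y - target
        for j, h in enumerate(hidden):
            # Error propagated back through the output link (old gain)
            # and through the slope of the sigmoid, h * (1 - h).
            grad_hidden = err * self.w_out[j] * h * (1.0 - h)
            self.w_out[j] -= lr * err * h
            for i, xi in enumerate(x):
                self.w_hidden[j][i] -= lr * grad_hidden * xi
            self.b_hidden[j] -= lr * grad_hidden
        self.b_out -= lr * err
        return 0.5 * err * err


if __name__ == "__main__":
    net = ThreeLayerNet()
    measurements = [i / 12 for i in range(12)]  # made-up, scaled inputs
    for _ in range(200):
        loss = net.train_step(measurements, target=0.7)
    print(loss, net.predict(measurements))
```

A real property predictor would be trained on many historical samples rather than one made-up measurement vector, and its inputs would be scaled plant measurements; the sketch only shows how gains and biases are adjusted from the prediction error.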

In our brain, there are billions and billions of neurons, arranged in depths of six, seven or even more layers and connected by many billions of nerves, each having its own gain and bias. The nerves establish electrical connections between the neurons, but we don't yet know exactly how all of these connections add up to higher reasoning, because as of yet this complex circuitry is incomprehensible.

We are already capable of building a neural network with four hidden layers that can self-learn by a process similar to how children learn. Smart cars, for example, are beginning to have some deep learning capabilities. Hyper-AI systems with six hidden layers and a feedforward network can already evaluate the causal chain that is required to "learn" (build) a seventh link of their neural networks.

Figure 2: It takes only one hidden layer to predict physical properties of a process. Humans use six or more. Artificial intelligence is now using four.

Where are we today?

It’s beyond me to judge the future effects of AI, or to question the views of people like Hawking, but I can make a few comments based on what we have learned during the past century by automatically controlling industrial processes.

When robots were introduced, they were welcomed because they are strong and can do things that are boring, or because they are more accurate and faster than humans. Later, we realized that they can also go to places that are inhospitable, such as Mars or war zones. But now, we are beginning to realize that AI can also change our lifestyle. It seems that our AI-brained robots can not only build cars, but can also drive them, and who knows, one day they might even be able to design better ones. Once the robots can design, build and drive cars, will our role be just to sit in them? Will we be necessary? If we reach the age when all we’re doing is clicking buttons on our super-phones, will we, homo sapiens, devolve into the "homo clikster"?

Is this true? Is this good? We don’t know the answer, but we do know that it’s advisable to ask the question: Can this human creation eventually gain superhuman intelligence and if it does, can it turn against its creator or make its creator unnecessary?

If I learned anything in process control, it’s that before controlling any process, we must fully understand it. Yet, we don’t understand the consequences of AI. What we do know is that machines have no consciousness, ethics or morality. Therefore, the human-robot relationship of the future must resemble a cascade loop, where humans are the master controllers and robots are the slaves. In other words, we should figure out how to merge these machines with our brains so that we maintain control of them.
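For readers less familiar with the cascade loop behind this analogy, the sketch below shows the idea in Python: the master controller never touches the final actuator; it only hands a setpoint to the slave controller, which does the fast work. Everything here is hypothetical and chosen for illustration: the `PIController` class, the tuning values, and the two first-order process models (a flow that follows a valve, and a temperature that follows the flow).

```python
class PIController:
    """Simple proportional-integral controller with a clamped output."""

    def __init__(self, kp, ki, out_min, out_max):
        self.kp, self.ki = kp, ki
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0

    def update(self, setpoint, measurement, dt):
        error = setpoint - measurement
        self.integral += error * dt
        raw = self.kp * error + self.ki * self.integral
        out = max(self.out_min, min(self.out_max, raw))
        if out != raw:
            # Output is saturated: freeze the integral to limit windup.
            self.integral -= error * dt
        return out


def simulate_cascade(temp_setpoint=50.0, steps=1000, dt=0.1):
    """Master controls temperature by setting the slave's flow setpoint."""
    master = PIController(kp=2.0, ki=0.2, out_min=0.0, out_max=100.0)
    slave = PIController(kp=2.0, ki=2.0, out_min=0.0, out_max=100.0)
    temperature, flow, flow_setpoint = 0.0, 0.0, 0.0
    for _ in range(steps):
        # Master (slow loop): decides WHAT the slave should achieve.
        flow_setpoint = master.update(temp_setpoint, temperature, dt)
        # Slave (fast loop): decides HOW, by moving the valve.
        valve = slave.update(flow_setpoint, flow, dt)
        # Assumed first-order processes: time constants of 1 s and 10 s.
        flow += dt * (valve - flow) / 1.0
        temperature += dt * (flow - temperature) / 10.0
    return temperature, flow, flow_setpoint


if __name__ == "__main__":
    print(simulate_cascade())
```

The design point that matters for the analogy is the direction of authority: the slave can act quickly and on its own measurements, but the target it pursues always comes from the master.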

This concern might sound far-fetched, but we have also learned that accelerating processes must be controlled as soon as they begin, and AI evolution is an extremely accelerating process. It took 75 years from the invention of the computer to get to where we are, and if we don’t start controlling it now, the AI explosion might become uncontrollable. Today, the "bad guys" could already turn our nuclear power plants into atomic bombs, sink unmanned oil drilling platforms or undermine the very foundations of our democracy.

Hawking and others believe that AI can become an omnipotent super-intelligence and obtain the ability to psychologically manipulate people. This they consider dangerous, because machines lack human-like ethics and morality. I’m not sure if their warnings are valid, but I am sure that today, the evolution of AI is uncontrolled. This puts us on a slippery slope, and we don’t know where it leads.