We're comfortable using regression models based on a power series such as y = a + bx + cx² + ... Here, the model structure is a sum of power-series terms, and the model is linear in the coefficients a, b and c (for a given x-value, the calculated y-value depends linearly on each coefficient value). We get the coefficient values by best matching the model to the data, and with this structure, linear regression is easy. Simple algebra is used to calculate the steady-state y from steady x conditions. If a quadratic model doesn't fit the data well, try a cubic model. We admit the model isn't the truth about the x-to-y relation, and that it takes human judgment to balance goodness-of-fit against model complexity. Data could be generated by designed experiment protocols, or it could be acquired from historical trends.
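As a minimal sketch of the power-series regression described above, the following fits linear and quadratic models by solving the least-squares normal equations. The data values and the helper names (`solve_linear`, `fit_polynomial`, `sum_squared_error`) are illustrative assumptions, not taken from any particular tool.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def fit_polynomial(xs, ys, order):
    """Least-squares coefficients [a, b, c, ...] for y = a + b*x + c*x^2 + ..."""
    m = order + 1
    # Normal equations (X^T X) coeffs = X^T y, where X[i][j] = xs[i]**j
    XtX = [[sum(x ** (j + k) for x in xs) for k in range(m)] for j in range(m)]
    Xty = [sum((x ** j) * y for x, y in zip(xs, ys)) for j in range(m)]
    return solve_linear(XtX, Xty)


def sum_squared_error(xs, ys, coeffs):
    """Goodness-of-fit measure: sum of squared model-to-data deviations."""
    total = 0.0
    for x, y in zip(xs, ys):
        y_model = sum(c * x ** j for j, c in enumerate(coeffs))
        total += (y - y_model) ** 2
    return total


# Illustrative, noise-free data generated from y = 1 + 2x + 0.5x^2
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [1.0 + 2.0 * x + 0.5 * x ** 2 for x in xs]

linear = fit_polynomial(xs, ys, 1)      # y = a + b*x
quadratic = fit_polynomial(xs, ys, 2)   # y = a + b*x + c*x^2
print("linear SSE:", sum_squared_error(xs, ys, linear))
print("quadratic SSE:", sum_squared_error(xs, ys, quadratic))
```

Comparing the two sums of squared errors is one simple way to see what an extra term buys. Deciding whether the improvement justifies the added complexity remains a judgment call.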

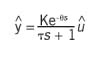

We also accept first-order-plus-dead-time (FOPDT) models of a transient response, which are often presented in Laplace transform notation such as:

$$\frac{Y(s)}{U(s)} = \frac{K e^{-\theta s}}{\tau s + 1}$$

Here, the model structure represents a first-order lag after a delay, and the modeled y-value isn't linearly dependent on the coefficients τ (time constant) or θ (delay). We don't solve for the y-value in that Laplace notation, but use numerical methods to solve the differential equation it represents. Again, we adjust model coefficient values to best match the model to the data, but here we need to use nonlinear regression. If you want a better fit to the data, perhaps use a second-order-plus-dead-time (SOPDT) model, or schedule the model gain, time constant or delay. Data might be generated by the reaction curve method: a single step-and-hold in the manipulated variable (MV) and observation of the transient controlled variable (CV) response.
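The procedure above can be sketched as follows, assuming the FOPDT model corresponds to the differential equation τ·dy/dt + y = K·u(t − θ) in deviation variables. The Euler integration, the simple coordinate search standing in for a nonlinear regression routine, and all names and numbers are illustrative assumptions. The "data" here are simulated, not measured.

```python
def simulate_fopdt(gain, tau, delay, u, dt):
    """Euler integration of tau*dy/dt + y = gain*u(t - delay), starting at y = 0."""
    n_delay = int(round(delay / dt))  # dead time as a whole number of samples
    y = [0.0]
    for i in range(1, len(u)):
        j = i - 1 - n_delay
        u_delayed = u[j] if j >= 0 else u[0]
        y.append(y[-1] + dt * (gain * u_delayed - y[-1]) / tau)
    return y


def sse(params, u, y_data, dt):
    """Sum of squared deviations between the FOPDT model and the data."""
    gain, tau, delay = params
    if tau < dt or delay < 0.0:  # keep Euler stable and the delay physical
        return float("inf")
    y_model = simulate_fopdt(gain, tau, delay, u, dt)
    return sum((ym - yd) * (ym - yd) for ym, yd in zip(y_model, y_data))


def fit_fopdt(u, y_data, dt, guess, steps, n_iter=200):
    """Coordinate search: try +/- a step in each coefficient, keep any
    improvement, and halve the step sizes when nothing improves."""
    params, steps = list(guess), list(steps)
    best = sse(params, u, y_data, dt)
    for _ in range(n_iter):
        improved = False
        for k in range(len(params)):
            for direction in (1.0, -1.0):
                trial = params[:]
                trial[k] += direction * steps[k]
                value = sse(trial, u, y_data, dt)
                if value < best:
                    params, best, improved = trial, value, True
                    break
        if not improved:
            steps = [0.5 * s for s in steps]
    return params, best


# Reaction-curve test: a single step-and-hold in the MV at t = 2
dt = 0.5
u = [0.0] * 4 + [1.0] * 96
# Simulated CV response with hypothetical gain = 2, tau = 5, delay = 3
y_data = simulate_fopdt(2.0, 5.0, 3.0, u, dt)

start = [1.0, 2.0, 1.0]  # initial guess for [gain, tau, delay]
start_sse = sse(start, u, y_data, dt)
fitted, fitted_sse = fit_fopdt(u, y_data, dt, start, steps=[0.5, 1.0, 1.0])
print("fitted [gain, tau, delay]:", fitted, "SSE:", fitted_sse)
```

Because the modeled response is not linear in τ or θ, there is no one-step algebraic solution. The coefficients are adjusted iteratively, and the result can depend on the initial guess and the search settings.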

Finite impulse response (FIR) models of transient behavior are common in model-based control. Use a pseudo-random binary sequence to make sequential changes in the MV, observe the CV, and adjust the impulse heights in the model to best fit the data. Use human judgment to specify the vector length, sampling interval and model inputs that provide the desired balance of fit against complexity. Linear algebra techniques are used to solve the matrix-vector models.
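A minimal sketch of the FIR procedure above, assuming the model form y[k] = h[0]·u[k] + h[1]·u[k−1] + ... with the impulse heights h found by linear least squares. A seeded random ±1 sequence stands in for a true pseudo-random binary sequence, and the "true" impulse heights used to generate the data are hypothetical.

```python
import random


def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def fit_fir(u, y, n_taps):
    """Least-squares impulse heights for y[k] = sum_i h[i] * u[k - i]."""
    # Each row holds the current and past MV values that influence y[k]
    rows = [[u[k - i] for i in range(n_taps)] for k in range(n_taps - 1, len(u))]
    targets = y[n_taps - 1:]
    XtX = [[sum(r[p] * r[q] for r in rows) for q in range(n_taps)]
           for p in range(n_taps)]
    Xty = [sum(r[p] * t for r, t in zip(rows, targets)) for p in range(n_taps)]
    return solve_linear(XtX, Xty)


# Binary MV sequence (random +/-1 values as a stand-in for a PRBS)
rng = random.Random(0)
u = [rng.choice([-1.0, 1.0]) for _ in range(80)]

# Simulated CV from hypothetical impulse heights (MV assumed zero before k = 0)
h_true = [0.0, 0.5, 0.3, 0.15, 0.05]
y = [sum(h_true[i] * u[k - i] for i in range(len(h_true)) if k - i >= 0)
     for k in range(len(u))]

h_fit = fit_fir(u, y, n_taps=len(h_true))
print("fitted impulse heights:", h_fit)
```

The vector length (`n_taps`) and the sampling interval are user choices. The model is linear in the impulse heights, so one linear algebra solution gives the fit without an iterative search.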

Those three modeling approaches are distinct; each has its own mathematical form, solution procedure, protocol for generating data, regression algorithm, and criteria for goodness. A user wouldn't use a steady-state model to try to match a transient response. Nor can a user apply the methods of one approach to make another generate a right answer. If the match is not good enough, the user chooses what additional complexity to include in the model.

AI underutilized

There are many other modeling approaches in the category of artificial intelligence (AI). They use modeling forms that are realizable with modern computing tools. AI techniques offer improved flexibility as modeling tools, but I believe they're underutilized.

My experience is that industrial managers have three objections to any form of AI. The first is a view held by those who, as young engineers, attempted to implement neural networks (NN) or fuzzy logic (FL) after being snookered by the promotional hyperbole of those selling the new tools. Because the techniques and methods were unfamiliar, or the promise was exaggerated, they couldn't get the tools to work. And, rather than admit "I was inadequate to get it to work," they claimed "It doesn't work." They kept this view as they were eventually promoted to managerial positions, and many years later blocked FL and NN applications based on those prior notions.

Second, there's the adverse reaction to the names chosen by the research/science community that created AI approaches. Fuzzy logic sounds like faulty thinking. Artificial intelligence sounds like an independent sentient presence with a possibly adverse agenda that can't be managed by humans. (Consider HAL from the movie 2001: A Space Odyssey.) And a neural network sounds like a mysterious, brain-like method of calculation. Instead of using the term regression to mean fitting a model to data, researchers use anthropomorphic terms such as learning, training or memorization, which make AI seem to be a form of intelligence. In fact, FL is just a way to implement human logic, and it's not faulty. In spite of the hyperbole of researchers and movie producers, there's no sentient presence behind an AI computation. Neural networks are not unknowable. The code and computation are as simple as any regression modeling that we use in multivariable statistics or model-predictive control.
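To illustrate how plain the computation is, here is a minimal sketch of a single-input neural network with one hidden layer. The structure (tanh hidden nodes, a linear output) and the coefficient values are assumptions chosen for illustration, not a recommended design.

```python
import math


def neural_network(x, hidden_weights, hidden_biases, output_weights, output_bias):
    """y = output_bias + sum_j output_weights[j] * tanh(hidden_weights[j]*x + hidden_biases[j])"""
    hidden = [math.tanh(w * x + b) for w, b in zip(hidden_weights, hidden_biases)]
    return output_bias + sum(v * h for v, h in zip(output_weights, hidden))


# Hypothetical coefficient values; "training" means adjusting these numbers
# so that the calculated y best matches data, which is nonlinear regression.
y = neural_network(0.5, [1.0, -2.0], [0.0, 0.5], [0.8, -0.3], 0.1)
print(y)
```

The model is an explicit algebraic function of x and its coefficients, just as a power-series model is. The difference lies in the functional form and in the number of coefficients to be fitted.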

The third manager objection I’ve encountered is a fear of the unfamiliar. How can one be confident that the models (understanding) are complete, comprehensive and reliable? How can one be sure that AI is properly adapting to a new situation and understands its context? Managers are in ‘responsible charge,’ and have the same fear about strange techniques as they do about how new engineers assess situations and make recommendations. Interestingly, the same questions can be asked of any modeling approach, but practice has made us comfortable with classic modeling approaches. I now think we have substantial application evidence that AI is as safe and legitimate as any modeling approach.

So develop your skills and consider NNs when traditional regression models don’t give desired results. Consider FL to automate human decision-making. First, understand the techniques and associated methods to develop the models. Use procedures designed for the technique, not for an alternate modeling approach.

As a message to vendors, I think it's important to not let excitement about new techniques lead to statements that make practitioners think a technique is either a cure-all or ready for an untrained person to apply. Vendors need to provide details of the methods, and complete instructions to users about what to do and what not to do. I think vendors shouldn't use the anthropomorphic terminology of the researchers. They should return to the familiar terminology of the users.

Recommended Reading

- Rhinehart, R. R., Instrument Engineers' Handbook, Vol III, Process Software and Digital Networks, 4th Edition, B. Liptak & H. Eren Editors, Section 2.13, "Neural Network Applications in Process Automation," Taylor and Francis, CRC Press, Boca Raton, FL, 2011

- Rhinehart, R. R., Instrument Engineers' Handbook, Vol III, Process Software and Digital Networks, 4th Edition, B. Liptak & H. Eren Editors, Section 2.14, "Fuzzy Logic Applications in Process Automation," Taylor and Francis, CRC Press, Boca Raton, FL, 2011