How to survive the oncoming train of technology

This year marks the 30th anniversary of the Distributed Control System, or DCS. The development of the DCS closely mirrors that of process automation itself, moving from proprietary technologies and closed systems to commercial off-the-shelf (COTS) components, industry-standard field networks, and Windows operating systems. But the most important transformation was from a focus on the system to a focus on business processes and on achieving operational excellence (OE) in process plants. The control engineer has been dragged into the world of manufacturing.

The DCS was introduced in 1975 by both Honeywell and Yokogawa, each of which had independently designed its own product: the TDC2000 and the CENTUM system, respectively. This marked the dawn of the system-centric era of the 1970s. Central to the DCS model were function blocks, which remain the fundamental building block of control both for DCS suppliers and for fieldbus technologies.

In the 1980s, users began to look at DCSs as more than just basic process control. Suppliers began to adopt Ethernet-based networks with their own proprietary protocol layers. The 1980s also saw the first PLCs integrated into the DCS infrastructure, as companies such as Rockwell, Siemens, Schneider and others entered the DCS market. The same decade marked the beginning of the Fieldbus Wars, which continue to this day.

From the 1980s through the 1990s, suppliers discovered that the hardware market was shrinking, commoditizing, and becoming saturated. Larger suppliers found themselves competing with second-tier suppliers, including some, such as Wonderware, Iconics, Citect and others, whose DCS emulation software ran entirely on COTS products. The large key suppliers began the transition to a software and value-added service business model.

Just as it became apparent that simply applying automation to manufacturing processes was no longer delivering the return on investment it once had, suppliers began to offer new applications such as production management, model-based control, real-time optimization, plant asset management, and real-time performance management tools such as alarm management and predictive maintenance, among many others. In addition, suppliers began to offer the consulting services necessary to make all these new applications work.

In the 2000s, we've seen a dramatic shift from system-centric, technology-oriented approaches to a business-centric approach. Users now look at the overall business value proposition, with elements such as asset utilization, return on assets, and increased plant performance coming to the forefront. Technology for technology's sake is no longer an effective argument for justifying automation products. Again, the control engineer is being dragged into the world of manufacturing.

What Time Is On Your Watch?

You can think of what has happened to our profession this way: In the early days, we were all concerned with how to build the watch. Knowing how to build the watch was critical to success.

Later, it became imperative to also know how to tell time, so your skill set expanded. Later still, we realized that it wasn't enough to know how to build the watch and tell time. Now we needed to know the benefits of being on time and the consequences of being late. So the skill set expanded once again.

That is where many process automation professionals are today: we know how sensors work, we know how loops work, and we know how to control a process.

Unfortunately, the required skill set has expanded once again. Now it isn't enough to be able to build the watch, tell time, know what happens when you're late, and know why you should be on time. Now we have to be experts on scheduling, because everything below that has become transparent.

Our primary value now is making sure that information from the plant reaches the enterprise. You see, what has happened to process automation is that it now works in fourth-order concepts. Many of you aren't there yet, and some of the new technologies aren't quite there yet either. If you don't get there, and soon, that train will flatten you like a penny on the track.

Disruptive Technologies R Us

We cover these disruptive technologies regularly, so we will just list them here:

- Collaborative engineering

- Integrated simulation and design/draw software

- Remote server applications, XML, B2MML and web services

- HMI and human factors engineering

- Automatic Identification and Data Capture (AIDC) technologies like RFID

- Robust wireless systems for monitoring and control

- Mobile computing

- More and better online analysis systems

- Smart sensors and final control elements

- Real-time performance management

- Real-time asset management

Looking a great deal like George Jetson's control room, this is a three-dimensional display currently working in an ExxonMobil facility. Source: 3D-Perception AS

Not only is there a shortage of competent process automation professionals, both in the end-user ranks and in the ranks of vendors and vendor service groups, as Dave Beckman pointed out, but there is also a significant need for advanced process automation training. WBF, ISA and other organizations have highly developed training courses aimed directly at the process automation professional, many covering the disruptive technologies we've identified. Yet, as our July Control Poll indicates, over 45% of you won't pay for training that your employer doesn't provide. If this trend continues, it isn't a good bet that more training facilities will become available. And it is a good bet that, if you don't get training on these new trends, you will be out on your ear, regardless of the shortage.

How to Survive the Oncoming Train

"Our Inescapable Data vision suggests that it is not just advances in each of these technologies, it is the combination of these fundamental elements that will break barriers and magnify gains to levels not yet anticipated. We think these new combinations will lead to an explosion of benefits driving both higher personal and economic satisfaction."

This is from a new book, Inescapable Data: Harnessing the Power of Convergence, from IBM Press, by Chris Stakutis and John Webster. Stakutis, the IBMer, is CTO for emerging storage software. Webster is founder and Senior Analyst for Data Mobility Group, a TLA consultant based in Boston. Their thesis is that the convergence of technologies and the ubiquity of data will drive a revolution. Applying this to process automation, we get the vision of tomorrow's plant we've given you. If you want to live long and prosper in this new world of inescapable data, you will need, as Rich Merritt encourages in his column, to get your mind right about technology and start thinking in fourth-order terms. Remember, nobody cares how the watch is built, or how time is kept.

What If? Virtual Plant Reality

By Gregory K. McMillan, CONTROL columnist

The chemical industry has had the software since the turn of the century to create a virtual plant, in which the actual DCS configuration resides in a personal computer along with a dynamic simulation of the process that has evolved into a graphically configured process and instrumentation diagram (P&ID). At the same time, the retirement of experienced engineers and technicians at the plant, the disengagement of the operator from the process caused by the use of advanced control, and worldwide competition have created a greater need than ever for training and optimization. Potentially, the virtual plant can go where the real plant hasn't gone, for good or bad reasons, and replace myth with solid evidence and engineering principles.

Why are process simulators so far behind flight simulators?

Flight simulators can focus on the servo response of hydraulic controls for well-defined components in air or the ions in space, whereas chemical processes have significant dead times, pneumatic actuators, and thousands of poorly defined compounds. The physical properties of mixtures (e.g., density, mass heat capacity, and boiling point) as a function of composition, pressure, and temperature are missing. Often hypothetical compounds must be created and the simulation forced to estimate the relationships. Just try to find hydrochloric acid and sodium hydroxide in a physical property package. If you combine this data problem with the uncertainties, disturbances, nonlinearities, and slowness of the process, control valves, and sensors, you are set up for failure.

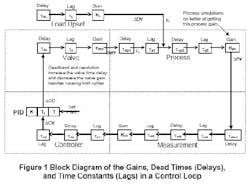

If you look at the block diagram of a control loop in Figure 1 (above), good physical property data and a high-fidelity simulator can yield an accurate process output for a process input at steady state. When process simulators are developed by process engineers for process design, this is the beginning and end of the quest: you have a design point and the information you need for a process flow diagram (PFD). However, control engineers want to know the time response of the process variables to changes in controller outputs and disturbances. The first-order-plus-dead-time response for the loop in manual (open loop) is the simplest way of representing the dynamic response and can be used for tuning PID controllers or setting up model predictive control. The overall open-loop gain is the product of the valve, process, and measurement gains; the total loop dead time is the sum of the pure dead times (time delays) and small time constants (time lags); and the open-loop time constant is the largest time constant in the loop, wherever it exists.

Process simulators typically don't address mixing and transportation delays in the response of the process and the sensor. They also don't include lags or delays for different types of sensors or actuators, or the installed characteristic, deadband (backlash), and resolution (stick-slip) of various control valve and positioner designs. The thermal lags of coils and jackets may not even be simulated, and non-equilibrium conditions are ignored. In other words, the parameters most high-fidelity process simulators focus on are the gain and primary time constant of a volume when the continuous process is up to speed. Process simulators may not even get the process gain right, because the manipulated variable used by the process engineer might be a heating or cooling rate, which neglects the dynamics associated with utility system design and interactions.
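The valve behavior that simulators leave out, deadband (backlash) and resolution (stick-slip), can be sketched in a few lines. The Python below is a minimal, hypothetical model: the class `SimpleValve` and its parameters are illustrative, not taken from any simulator or vendor. It assumes deadband is the total signal reversal needed before the stem moves and resolution is the smallest stem move once the valve breaks free, with signal and position in the same units (for example, percent of stroke). It shows how a modest reversal in controller output can produce no valve movement at all, something an ideal valve model never reveals.

```python
class SimpleValve:
    """Hypothetical control valve with deadband (backlash) and resolution (stick-slip).

    Simplified illustration only: signal and position share the same units
    (for example, percent of stroke).
    """

    def __init__(self, deadband: float = 0.0, resolution: float = 0.0, position: float = 0.0):
        self.deadband = deadband        # total signal reversal needed before the stem moves
        self.resolution = resolution    # smallest stem move once the valve breaks free
        self._backlash_out = position   # where the backlash element alone would put the stem
        self.position = position        # actual stem position the process sees

    def update(self, signal: float) -> float:
        half = self.deadband / 2.0
        # Backlash: the output follows only when the signal pushes past an edge of the deadband
        if signal > self._backlash_out + half:
            self._backlash_out = signal - half
        elif signal < self._backlash_out - half:
            self._backlash_out = signal + half
        # Stick-slip: the stem stays put until the pending move reaches the resolution, then jumps
        if abs(self._backlash_out - self.position) >= self.resolution:
            self.position = self._backlash_out
        return self.position


ideal = SimpleValve()
real = SimpleValve(deadband=2.0, resolution=0.5)
signals = [0.5, 1.25, 1.75, 0.75, -0.5, -1.0]
print([ideal.update(s) for s in signals])  # [0.5, 1.25, 1.75, 0.75, -0.5, -1.0]
print([real.update(s) for s in signals])   # [0.0, 0.0, 0.75, 0.75, 0.75, 0.0]
```

The stick-slip step here simply jumps to the pending position; real packing friction and positioner behavior are more complex, so treat this as an illustration of the discontinuity a controller sees rather than a valve model to tune against.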
The vapor is also assumed to be instantaneously in equilibrium with the liquid by means of a flash calculation. A dynamic process simulator often shows the temperature response of a perfectly mixed volume to an instantaneous change in heat input or removal rate under equilibrium conditions, which for columns and evaporators means after the mixture reaches its boiling point. Wouldn't it be grand if real plants started up or responded this rapidly and ideally? Wouldn't it be super if the severe discontinuities of split-range valves and the lags and upsets from fighting utility streams were just a bad dream?

Taking Control

Control engineers who understand the importance of dead time and loop dynamics need to take the lead in developing the models used in dynamic simulations. A new definition of model fidelity is needed, one that emphasizes the dynamic response of the process variables to changes in manipulated or disturbance variables. It should cover unusual and non-steady operating conditions during startup and batch sequencing, even if this means sacrificing some of the match between a given process input and output at design conditions. If we define and seek fidelity in terms of what the control system and operator see and have to deal with, the promise of the virtual plant can be fulfilled.
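Fidelity defined this way can be summarized by the first-order-plus-dead-time parameters described earlier: the open-loop gain as the product of the valve, process, and measurement gains, the dead time as the sum of the pure delays and the small lags, and the time constant as the largest lag. The minimal Python sketch below applies those rules of thumb. The names `FOPDT` and `approximate_loop` and all the numbers are hypothetical, chosen only to show the arithmetic, not data from any real loop.

```python
import math
from dataclasses import dataclass


@dataclass
class FOPDT:
    """First-order-plus-dead-time approximation of an open-loop response."""
    gain: float           # overall open-loop gain (valve x process x measurement)
    dead_time: float      # pure delays plus the smaller lags
    time_constant: float  # the single largest lag in the loop

    def step_response(self, t: float, step: float = 1.0) -> float:
        """Change in the measured variable t time units after a controller-output step."""
        if t <= self.dead_time:
            return 0.0  # nothing is seen until the dead time has elapsed
        return self.gain * step * (1.0 - math.exp(-(t - self.dead_time) / self.time_constant))


def approximate_loop(valve_gain, process_gain, measurement_gain, dead_times, time_constants):
    """Lump loop elements into an FOPDT model using the rules of thumb in the text."""
    gain = valve_gain * process_gain * measurement_gain
    lags = sorted(time_constants)
    largest = lags[-1]       # becomes the open-loop time constant
    small_lags = lags[:-1]   # every other lag is treated as extra dead time
    dead_time = sum(dead_times) + sum(small_lags)
    return FOPDT(gain, dead_time, largest)


# Hypothetical loop: numbers are illustrative only, times in seconds
loop = approximate_loop(
    valve_gain=2.0, process_gain=0.5, measurement_gain=1.0,
    dead_times=[2.0, 1.0],            # e.g. transport delay, analyzer delay
    time_constants=[30.0, 3.0, 1.5],  # e.g. vessel, sensor, actuator lags
)
print(loop)  # FOPDT(gain=1.0, dead_time=7.5, time_constant=30.0)
print(round(loop.step_response(loop.dead_time + loop.time_constant), 3))  # 0.632
```

Lumping every lag except the largest into dead time is the simplification the text describes; it can be rough when two lags are of similar size, so parameters identified from plant step tests may differ from this estimate.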