The fruit of accelerating analytics is plain to see in the gains achieved by many recent process applications. For instance, 90-year-old Monarch Cement Co. in Humboldt, Kan., started its latest analytics journey in 2017 by upgrading to ABB's Knowledge Manager data coordinator, Version 9.0, along with ABB Ability System 800xA controls, ABB Laboratory Information Management System (LIMS) and AC 800M controllers. These control and LIMS upgrades allowed Monarch to improve its collection, consolidation and distribution of production, product quality and energy consumption data. They also improved data mining and sharing because LIMS is closely integrated with Knowledge Manager, which lets operators access all lab information directly from their System 800xA dashboards by right-clicking on any displayed production object.

“My main interest was in the LIMS add-on,” says Mitchell King, assistant quality control and environmental supervisor at Monarch. “On the quality side, we didn’t have a LIMS prior to this. It was all paper logs, and we used 800xA's operating system to feed some quality data into the production line. Now, since we’re feeding data into our production line, marrying production data and quality data with LIMS was a natural fit with 800xA.”

William Peters, automation supervisor at Monarch, adds: "We can integrate our laboratory data with our production data, and that’s huge. We use features like the downtime report, so we can go back and do root-cause analyses."

The company produces 1.3 million tons per year of Portland and bagged masonry cement for the midwestern U.S. at its single plant in Humboldt, where it operates a 260-ton-per-hour Pfeiffer roller mill that uses heat from the company’s two precalciner kilns for drying. The mill employs three 31-ton rollers, positioned vertically and tangentially to the grinding table, plus an additional 1,500 psi of hydraulic pressure to crush the limestone. Finish milling is performed by five ball mills (Figure 1). To maintain quality, Monarch's technicians perform bi-hourly tests on raw materials, clinker and finished cement with an ARL 9900 total cement analyzer and other methods. These include Blaine testing and 325-mesh percent passing to measure fineness, as well as sulfur content and free lime tests to make sure the clinker is completely reacted. The company collects data from thousands of points in its production process, and uses classic wet chemistry methods to determine loss on ignition, insoluble residue, free lime, and solid fuel and water analyses.

As a data coordinator, Knowledge Manager 9.0 pulls together information from System 800xA, such as control system equipment health and status reports, production stops, alarm statistics and KPIs, as well as laboratory results, manual entries, production information, SAP ERP systems, energy and emissions monitoring data, and other sources. This gives operators a single-pane-of-glass view of everything happening in Monarch's production process at any moment. Because Knowledge Manager and LIMS are fully integrated, laboratory results are fed directly into System 800xA's database to maximize product control uniformity and improve production.

“LIMS and Knowledge Manager make our data more accessible to everyone controlling our process,” adds King. “Now, a production foreman or control room operator doesn’t have to call the lab to learn what the results were or look at a piece of paper to make a change. That is the real advantage—it makes quality and production data one and the same."

Peters adds, “You have no idea how much of an improvement this is over our old Excel days of data analysis and trending. I went from a Knowledge Manager that no one ever looked at to a flood of wanting more data. Now, when it goes down or when I have to take it offline, it affects our whole system. We’ve become dependent on it pretty quickly. People are expecting the data and expecting it to be correct, and that’s what I like.”

Assisting aromatic derivatives

Similarly, Mitsubishi Gas Chemical Co. (MGC) manufactures fine and specialty chemicals from natural gas and petrochemical feedstocks, while its Mizushima plant in Kurashiki City, Okayama, Japan, produces original aromatic derivatives from mixed xylene. Used in plastic containers and perfumes, these products are obtained from petroleum refining in a continuous process monitored and controlled by a Centum VP distributed control system (DCS) from Yokogawa Electric Co., which monitors the process at preset points and adjusts for fluctuations. However, MGC recently discovered that more fluctuations were inexplicably occurring at night, so it enlisted Yokogawa's Process Data Analytics software to help clear up this mystery.

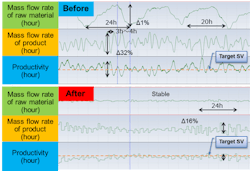

Figure 2: Mitsubishi Gas Chemical Co.'s (MGC) aromatic derivatives plant in Kurashiki City, Okayama, Japan, used Yokogawa's Process Data Analytics software to find a 1% difference in its raw material flow rate that was triggering increased process fluctuations at night. This discovery allowed MGC to stabilize its process, reduce alarms by 20% and reduce manual interventions by 30%. Source: Yokogawa and MGC

MGC section staff leader Tsukasa Taketa and Yokogawa analyst Takeo Ueda report they analyzed the Mizushima plant's production process from raw materials to finished products, including data for thousands of tags stored in its Exaquantum historian and plant information management system. Their search revealed that day and nighttime variations in ambient temperature and atmospheric pressure were causing a 1% difference in the raw material flow rate, and triggering the increased process fluctuations at night.

"Process Data Analytics software uses the Mahalanobis Taguchi System (MTS), but we also examined the plant's data from the material, machine, 'huMan' and method (4M) perspectives to find the most appropriate analysis method, and convert the information without missing smaller features," says Ueda. "This analysis was the toughest I'd ever done, but by carefully looking at and sharing this data with Mr. Taketa and members of his production section, we were able to make progress in our analysis."

Thanks to finding the 1% flow rate variance, MGC was able to devise a control scheme for stabilizing its production process at the Mizushima plant, and adjusted the loops controlled by the Centum VP DCS. Taketa and Ueda report this stabilization reduced the plant's alarms by 20%, reduced manual interventions involving manipulated variables (MV) and set variables (SV) by about 30%, and improved overall productivity (Figure 2).
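To illustrate the kind of calculation behind the Mahalanobis Taguchi System (MTS) that Ueda mentions, here is a minimal sketch in Python. It is not Yokogawa's implementation: the two variables (ambient temperature and raw material flow rate), the sample values, and the function names `build_reference` and `scaled_distance` are all assumptions invented for illustration. The idea is to characterize a reference group of normal operating points, then score any new point by its Mahalanobis distance divided by the number of variables, so that normal points average 1 and points that break the usual relationship between variables score much higher.

```python
from statistics import mean, pstdev

def build_reference(samples):
    """Summarize the normal group: means, standard deviations and correlation.

    Hypothetical helper; handles exactly two variables per sample.
    """
    xs = [x for x, _ in samples]
    ys = [y for _, y in samples]
    mx, my = mean(xs), mean(ys)
    sx, sy = pstdev(xs), pstdev(ys)
    # Correlation between the two standardized variables
    r = mean(((x - mx) / sx) * ((y - my) / sy) for x, y in samples)
    return mx, my, sx, sy, r

def scaled_distance(point, ref):
    """Squared Mahalanobis distance divided by the variable count (2)."""
    mx, my, sx, sy, r = ref
    zx = (point[0] - mx) / sx
    zy = (point[1] - my) / sy
    # Closed form of z^T R^-1 z for the 2x2 correlation matrix [[1, r], [r, 1]]
    md_squared = (zx * zx - 2 * r * zx * zy + zy * zy) / (1 - r * r)
    return md_squared / 2

# Invented daytime data: (ambient temperature in deg C, flow rate in % of nominal)
daytime = [
    (20.0, 100.0), (22.0, 100.4), (24.0, 100.9),
    (21.0, 100.1), (23.0, 100.8), (25.0, 101.0),
]
reference = build_reference(daytime)

# Invented night-time point: cold ambient temperature but a high flow rate
night_point = (12.0, 101.0)

for label, point in [("day", daytime[0]), ("night", night_point)]:
    print(label, round(scaled_distance(point, reference), 2))
```

A real analysis of the kind described would span far more than two tags and would need a general matrix inverse rather than the closed-form two-variable case used here.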

Beyond the usual borders

Just as accelerated abilities allow it to find and solve more subtle problems, data analytics is evolving rapidly enough to surpass its initial parameters, and deliver intelligence in some unexpected places and between some formerly separate functions.

Figure 3: Lundin Norway's Edvard Grieg platform processes oil and gas streams 200 km off Norway's west coast, but many of its functions are managed from the company's offices 600 km away in Oslo with help from Honeywell Forge asset monitoring, management and analytics software, which is also gauging its environmental impact and generating energy monitoring reports that can save $1.23 million per year and reduce CO2 emissions. Source: Honeywell and Lundin Norway

One end user merging disciplines is Lundin Norway AS, which operates its Edvard Grieg platform 200 kilometers (km) off Norway's west coast, where it serves as an oil and gas "field center," processing well streams from nearby Utsira High fields (Figure 3). However, company engineers and support staff collaborate with its operators to manage the platform from more than 600 km away at its facility in Oslo. One jointly applied tool is Honeywell Forge Enterprise Asset Management software from Honeywell Process Solutions, which enables flexibility while maintaining productivity. They also perform asset management and use advanced machinery models in Honeywell Forge Asset Monitor and Predictive Analytics software, which is the asset performance management and data analytics engine in Honeywell’s Enterprise Performance Management software.

In addition, Honeywell's software and support devices connect more than 100 assets on the platform to Lundin’s engineers in Oslo to allow remote monitoring of asset efficiency and impending health issues of the platform’s compressors, pumps, turbines and other equipment. Likewise, Honeywell integrates data from other condition monitoring systems for vibration, switchgear and wells into a unified data display. “We have expert monitoring systems, but it’s impossible to monitor each system simultaneously,” says Stig Pettersen, principal automation engineer for Lundin Norway. “Honeywell's asset software integrates all of our systems, and we’ve been making our KPIs so well that we rarely have to log into the expert systems."

Pettersen reports that Lundin Norway must also measure the environmental impact of its operations to improve its stewardship goals and comply with Norwegian regulations. However, a typical expert system wouldn't be sufficient, so the firm decided to use Honeywell's digital twin functions to quantify how much energy each generating asset produces and how much each consuming asset uses. This lets it perform full energy accounting and calculate equivalent CO2 emissions. With no added investment, Lundin configured the software to generate real-time, energy-loss reports from the digital twins.

The company's initial energy monitoring system (EMS) report provided an objective measurement of expected and some unexpected loss sources, and it also evaluated the platform’s energy efficiency and associated emissions on demand. Every five minutes, the EMS calculates a running, 24-hour, aggregated value for energy loss, and at the end of each day, it saves the final calculation for presentation in a daily time-series trend. The report also itemizes the platform's 70-megawatt (MW) generating capacity relative to 11 primary energy consumers and six smaller consumers. Using daily loss averages, Lundin identifies losses that can be reduced, including an average of $580 per day for every percent of reduced power generation. Because the platform runs 24/7, a 5.5% reduction translates to $1.23 million per year in potential energy savings and 5.6 thousand metric tons (KMT) per year of potentially reduced CO2 emissions.
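The rolling calculation described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Honeywell's or Lundin's implementation: the class name `EnergyLossMonitor`, the function `annual_savings`, the loss units and the sample values are all hypothetical. It keeps the most recent 24 hours of five-minute loss samples, returns the running aggregate after each sample, and stores the end-of-day value for a daily trend. The helper at the end simply multiplies the quoted daily figure by the percentage and by 365 days. With the $580 and 5.5% quoted above, that product is about $1.16 million, a little under the reported $1.23 million, so the published figure presumably rests on inputs not given here.

```python
from collections import deque

SAMPLES_PER_DAY = 24 * 60 // 5  # 288 five-minute samples in 24 hours

class EnergyLossMonitor:
    """Hypothetical rolling 24-hour aggregate of energy-loss samples."""

    def __init__(self, samples_per_day=SAMPLES_PER_DAY):
        self.samples_per_day = samples_per_day
        # deque with maxlen drops the oldest sample automatically
        self.window = deque(maxlen=samples_per_day)
        self.daily_trend = []  # one saved value per completed day
        self.count = 0

    def add_sample(self, loss):
        """Record one five-minute loss value and return the running total."""
        self.window.append(loss)
        self.count += 1
        running = sum(self.window)
        # At the end of each day, save the final calculation for the trend
        if self.count % self.samples_per_day == 0:
            self.daily_trend.append(running)
        return running

def annual_savings(usd_per_day_per_percent, percent_reduction, days=365):
    """Straightforward annualization for a plant that runs every day."""
    return usd_per_day_per_percent * percent_reduction * days

# Two days of invented samples: a constant loss of 0.5 units per interval
monitor = EnergyLossMonitor()
for _ in range(2 * SAMPLES_PER_DAY):
    monitor.add_sample(0.5)

print(monitor.daily_trend)
print(annual_savings(580, 5.5))
```

Summing the window on every call keeps the sketch simple and avoids floating-point drift; with only 288 samples per window, the cost is negligible.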

“Most losses are operational, which we can more readily control with process improvement and optimization,” concludes Pettersen. “Design losses are harder to address, and it’s not feasible to eliminate all losses. However, since we now have a rolling calculation, we can tell how much, for example, turbine and compressor fouling is costing us. We see both performance and economical effects immediately following wash maintenance.”