Optimizing modern instrumentation maintenance programs

Key highlights:

- Modern transmitters drift very little, and other factors are the bigger problem sources.

- Many I&C programs still allocate excessive time to transmitter calibration, while primary elements and installation issues are neglected.

- Frequent “micro-adjustments” often add errors instead of improving accuracy.

- ISA studies show technicians introduce errors 17% of the time when making adjustments.

In this continuation of our series with Mike Glass, owner, Orion Technical Solutions, we discuss the most common gaps in instrumentation and controls. Glass specializes in instrumentation and automation training and skills assessments and holds ISA-certified automation professional (CAP) and certified control systems technician level III (CCST III) credentials. He has 40 years of instrumentation and controls (I&C) experience across multiple industries.

Greg: Mike, in our previous columns, we covered 4-20mA loops and SMART instrumentation technology. For this third piece, I'd like to explore maintenance approaches. What's the biggest issue you're seeing with instrument maintenance programs?

Mike: One of the biggest issues I see is that a major portion of annual I&C labor still goes to transmitter calibrations, while too little time is spent on tasks that could deliver more ROI.

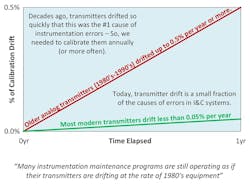

In the 1980s, when transmitters drifted up to 1% per year and caused about 70% of the problems, it made sense to spend that effort on calibrations. Most of today’s installed transmitters drift at a fraction of that rate, and transmitter calibrations only cause about 5% of measurement system problems. A study by ARC Advisory Group found 95% of SMART transmitters showed no significant drift between typical calibration intervals, and more than 70% of actual calibration adjustments provided no operational benefit.

Greg: That's an interesting observation. How has this affected overall measurement system reliability?

Mike: When most I&C programs were established decades ago, transmitter drift was often the largest source of error due to those faster drift rates—so organizations rightly focused heavily on transmitter calibrations. Because drift errors were so dramatic back then, the other measurement errors weren't given the same level of attention as the actual transmitter calibrations.

But now that transmitter errors are so much smaller, those other factors—like the primary elements, installation and tubing issues, current signal leakage, I/O card calibration, and scaling errors—represent much larger percentages of overall measurement uncertainty. However, these other problems are often neglected due to the lingering emphasis on the transmitter calibrations.

You can see from the charts that as technology has advanced, the source of errors has shifted from the calibration-oriented parts of the system to the mechanical and physical side. Transmitter calibration issues are a sliver of the overall issues nowadays, while primary elements and installation issues are much more significant than in the past.

Another related issue that I have observed during assessments of technicians doing instrument verifications and calibrations is that many don't check anything beyond the transmitter output. They simply simulate the test points and record the output of the transmitter, ignoring other (more likely) sources of error. Poorly detailed verification and calibration procedures and forms magnify the problem. Tunnel-vision fixation on the transmitter is a serious problem in most industries.

Greg: I've written about this very topic in my ISA Mentor Q&A "How Often Do Measurements Need to be Calibrated?". The thermocouple (TC) drift noted from a 1973 resource is overstated and is more likely about 0.2 °F per year today for the best TCs. What do you think keeps organizations from recognizing the more prevalent sources of measurement error?

Mike: In short, institutional inertia: "we've always done it this way." Many organizations simply haven't thought about it. It always amazes me how, even during major maintenance department transformations, details like this for I&C maintenance repeatedly get completely overlooked.

The ‘annual calibration’ assumption was burned into organizations back when it made sense for older technology, but very few organizations have taken the time to question if it's still necessary. Not only are most companies wasting tons of resources on excessive numbers of calibrations that add little value – they are simultaneously ignoring the much bigger needs that could easily provide much bigger ROI for their resources.

Greg: What sources or studies can organizations use to justify making changes to their I&C maintenance plans and procedures?

Mike: ISA-RP105.00.01 "Management of Instrumentation Calibration and Maintenance Programs" provides excellent guidance on performing the necessary calculations and justifications for calibration frequency adjustments, and for creating more rational maintenance programs. However, many organizations haven't implemented its recommendations, and very few I&C professionals are even aware of the recommended practice, its reasoning, or the technological changes behind it.

Greg: You've mentioned "envelope assurance." Can you explain that concept?

Mike: Envelope assurance is understanding what accuracy is necessary for effective process operation, then simply ensuring instruments remain within that performance envelope - versus striving for a perfect alignment at frequent intervals. In most cases the objective of calibration programs should be to ensure that instruments are within the prescribed tolerance vs trying to constantly keep them tweaked to some theoretical bullseye target.

Many technicians perform what I call 'micro-calibration adjustments' rather than making use of the full specified tolerances. This practice is problematic for several reasons: calibration equipment is often less accurate than modern transmitters; micro-adjustments hamper tracking of the real drift rates; most processes have natural variability far exceeding these tiny adjustments; and, significantly, an ISA study found technicians introduce errors 17% of the time when making calibration adjustments (but that’s a whole additional topic)!
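The "stay inside the envelope" decision can be written down as a simple rule. The following is only a minimal sketch, not a procedure from ISA-RP105.00.01: the function name, the 0.5% of span tolerance, and the 0.1% of span test equipment uncertainty are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class AsFoundPoint:
    applied_pct: float   # simulated input, % of span
    measured_pct: float  # transmitter output, % of span


def evaluate_as_found(points, tolerance_pct, test_uncertainty_pct):
    """Decide whether as-found data calls for anything beyond recording it.

    tolerance_pct and test_uncertainty_pct are assumed to be site-defined
    values expressed in % of span (hypothetical in this sketch).
    """
    errors = [p.measured_pct - p.applied_pct for p in points]
    worst = max(errors, key=abs)  # signed error with the largest magnitude
    within_envelope = abs(worst) <= tolerance_pct
    return {
        "worst_error_pct": worst,
        # Inside the envelope: record the data and leave the instrument alone.
        "action": "record_only" if within_envelope else "investigate_then_adjust",
        # An error smaller than the test equipment uncertainty cannot be
        # distinguished from the error of the test equipment itself.
        "resolvable": abs(worst) > test_uncertainty_pct,
    }


# Hypothetical five-point check, all values in % of span
points = [AsFoundPoint(a, m) for a, m in
          [(0, 0.02), (25, 25.05), (50, 50.08), (75, 75.06), (100, 100.04)]]
result = evaluate_as_found(points, tolerance_pct=0.5, test_uncertainty_pct=0.1)
print(result["action"], result["resolvable"])  # record_only False
```

In this example the worst error (about 0.08% of span) is well inside the tolerance and is also smaller than the assumed test equipment uncertainty, so a 'micro-adjustment' would mostly copy the calibrator's own error into the transmitter.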

Greg: What about making small calibration adjustments?

Mike: This is a common misconception in the I&C field, stemming from the days when frequent calibrations were needed and were indeed beneficial due to the magnitude of errors, and the fact that field instrumentation drifted faster than the test equipment back then.

The instruments typically don't drift enough to justify 'readjustments' more than once every 5-10 years. If an adjustment is required sooner than that, it likely points to other problems, which should be investigated rather than tweaking the instrument at every opportunity (which is often what happens).

I have observed many technicians (even senior, highly experienced ones) attempt to calibrate an instrument that was off by as little as 0.1% or so, claiming to be 'perfectionists' but failing to understand that they are likely just injecting the small but inherent errors of their test equipment into the instrument.

In our first article on 4-20mA loops, I described a situation where technicians repeatedly adjusted a certain transmitter that was showing discrepancies between HMI readings and transmitter output. The real culprit wasn't transmitter drift – it was a varying error in the signal path due to moisture in a junction box that was causing varying leakage currents, resulting in a varying value on the HMI. Once they finally addressed the real problem, the transmitter remained stable for years without any further adjustments.

This scenario highlights a critical question I&C personnel should ask in a scenario where a calibration adjustment is required: Why would one transmitter of a particular model drift significantly faster than identical units in similar service? Or why would a transmitter drift much faster than the established and proven stats and specifications? Such anomalies typically point to problems elsewhere in the measurement system – problems that should be investigated and resolved.
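One way to put that question to the data is to estimate each transmitter's drift rate from its recorded as-found errors and compare it with the published stability specification. The sketch below assumes the instruments were not adjusted between checks; the tag names, the 0.025% of span per year specification, and the 3x flagging factor are hypothetical.

```python
def drift_rate_pct_per_year(history):
    """Least-squares slope of as-found error (% of span) versus time (years).

    history: list of (years_since_baseline, as_found_error_pct) tuples,
    recorded without adjusting the transmitter between checks.
    """
    n = len(history)
    if n < 2:
        raise ValueError("need at least two as-found records")
    mean_t = sum(t for t, _ in history) / n
    mean_e = sum(e for _, e in history) / n
    sxx = sum((t - mean_t) ** 2 for t, _ in history)
    if sxx == 0:
        raise ValueError("records must cover more than one point in time")
    sxy = sum((t - mean_t) * (e - mean_e) for t, e in history)
    return sxy / sxx


def flag_outliers(rates_by_tag, spec_pct_per_year, factor=3.0):
    """Return tags whose drift magnitude exceeds factor times the spec."""
    limit = factor * spec_pct_per_year
    return sorted(tag for tag, rate in rates_by_tag.items() if abs(rate) > limit)


# Hypothetical records for two identical transmitters in similar service
records = {
    "PT-101": [(0, 0.00), (1, 0.02), (2, 0.04), (3, 0.06)],
    "PT-102": [(0, 0.00), (1, 0.20), (2, 0.40), (3, 0.60)],
}
rates = {tag: drift_rate_pct_per_year(h) for tag, h in records.items()}
print(flag_outliers(rates, spec_pct_per_year=0.025))  # ['PT-102']
```

A flagged tag is a prompt to inspect the rest of the measurement system (wiring, junction boxes, impulse lines, I/O), not a prompt to adjust the transmitter.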

Here are some of the reasons instruments shouldn't be adjusted unless outside the prescribed recalibration tolerance:

- Most field calibration equipment is less accurate and has lower stability specifications than typical modern transmitters (sometimes by a factor of up to 2-4x or more depending on the equipment used).

- It is better to simply watch and track those errors over time. This allows for powerful analysis of drift rate and other issues. This data can be incredibly helpful in the long term if it is simply recorded and analyzed.

- Another common mistake I observe is that technicians fail to wait for the transmitter output to fully stabilize before taking readings or adjusting. This is another whole huge topic in and of itself but suffice it to say that a significant percentage of technicians are unaware of how long they should wait (based on damping settings) before taking readings or adjusting. The damping misconceptions are rampant and very problematic.

- Statistically speaking, technicians are more likely to introduce errors by going in and adjusting than the instrument is to require a calibration adjustment. So, adjusting when it is not really required is not helpful.

- Anytime we adjust I&C systems, there is a chance of errors or mistakes. An ISA study found that technicians introduce errors 17% of the time when making calibration adjustments… That lines up with what I am seeing when I observe (both young and old) technicians performing calibration work – and it should be a serious cause for concern to anyone charged with I&C maintenance programs.
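On the damping point in the list above, a rough wait time can be estimated if the damping setting is assumed to act as a first-order time constant, in which case the unsettled fraction of a step after time t is exp(-t / damping). That assumption does not hold for every device (manufacturers define damping differently, and the sensor and test source add their own lag), so the sketch below should be read as a lower bound. The function name and the 0.1% settling target are hypothetical.

```python
import math


def settle_time_s(damping_s, residual_fraction=0.001):
    """Seconds until a first-order lag is within residual_fraction of a step.

    Assumes the damping setting is a first-order time constant, so the
    remaining fraction of the step after t seconds is exp(-t / damping_s).
    """
    if damping_s < 0:
        raise ValueError("damping must be non-negative")
    if not 0 < residual_fraction < 1:
        raise ValueError("residual_fraction must be between 0 and 1")
    return damping_s * math.log(1.0 / residual_fraction)


# With 2 s of damping, settling to within 0.1% of the step takes about
# 6.9 time constants under this assumption.
print(round(settle_time_s(2.0, 0.001), 1))  # 13.8
```

Under the same assumption, one time constant after a step the output has covered only about 63% of the change, which is why readings taken "a couple of seconds" after applying a test point can look like calibration error.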

Admittedly there are situations where a transmitter or instrument should be maintained as accurately as possible and where frequent micro-adjustments are justified – but those cases are rare and are usually guided by regulatory requirements.

Greg: You've mentioned that maintenance resources are often misdirected for instrumentation. Explain your thoughts on that.

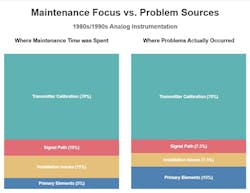

Mike: The data is quite revealing. Much of the time spent on instrument calibration is still being focused on the transmitter calibration -- but extraordinarily little attention is given to the bigger sources of problems.

It used to be the other way around! Back when instruments drifted up to 1% per year, the transmitter caused 70% of the problems, so it made sense to allocate so much I&C labor to transmitter calibrations, and the allocation of time was roughly proportionate to the share of errors caused by each part of the overall system. The figure below compares where maintenance time was spent with where the problems were, back in the days when most I&C programs (and most of the remaining skillset) were established. Notice that the maintenance time lined up closely with where problems occurred.

But… technology has changed dramatically, and the old myths and assumptions of many instrumentation 'traditions' are no longer valid. Most I&C maintenance programs need to take a close look at where they are spending their time and reevaluate from the bottom up.

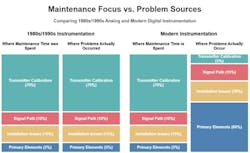

For comparison, the figure below shows the time vs cause of problems bar graphs for the 1980s and 1990s (above) alongside the same bar graphs for modern equipment (below).

Notice the conflicting ratios for modern time spent vs problems occurring:

- Transmitter Calibration (14:1 ratio of time spent to problems occurring)

- Primary Elements (1:12 ratio of time spent to problems occurring)

It is interesting to note that the time spent in each area is the same as it was in the 1980s and 1990s. This is such a clear example of institutional inertia and failure to pay attention to changes in technology.

As the illustration shows, the problems have shifted to where transmitter calibrations are now only a small portion of the problems. Instrumentation resources are being misallocated.

One would think that maintenance initiatives such as RCM (Reliability Centered Maintenance) or RBM (Risk Based Maintenance), Six Sigma, and others would have addressed these issues. But those initiatives usually overlook the I&C realm (completely) and have missed this low hanging fruit for decades at most sites.

I think the reason this gets overlooked is that the maintenance program experts who implement these programs simply focus on the parts they can understand, such as mechanical systems, policies, and general operations, but fail to include or get help from the I&C talent who might be able to apply those programs to improve the mystical, magical realm of I&C. All the programs mentioned above could (and should) be able to resolve the issues we are talking about from within their existing frameworks, but they must utilize the right expertise to accomplish it.


Greg: You've mentioned a "walk-by" inspection check that you suggest as a partial replacement for some of the excessive calibration procedures being performed. Please elaborate on that concept.

Mike: Many instrumentation issues stem from signal path errors (including analog I/O calibration and scaling problems) as well as installation issues, primary element problems, and sensor faults. For many of these issues, frequent walk-by inspections & spot checks offer significant value, especially for transmitters with local displays—which are now common in most modern facilities.

The key benefits of the Walk-by Inspection and Spot-Check procedures are:

- Non-intrusive: Can be performed without permits or time-consuming administrative processes

- Low risk: Doesn't expose the plant or technician to risks of making mistakes or changing settings

- Efficient: A technician can complete 10-20 checks per hour with minimal paperwork

- Proactive: Identifies developing problems well before they impact operations

Here’s a quick summary of a basic walk-by inspection and spot-check:

- Physical inspection:

  - This is a quick inspection, ideally guided by a detailed checklist for consistency

- Comparative analysis:

  - Compare the instrument reading against another instrument measuring the same variable (compare via HMI if transmitter does not have a display or local readout)

  - For loops that have digital data tied into the control system:

    - Compare the incoming digital value (PV) to the analog value – this is an excellent way to find emerging problems in the signal path, or other issues such as scaling or analog input calibration problems.

  - Many larger facilities already have programmed "deviation monitoring" comparing BPCS to SIS or other protective system measurements

- Display/readout check (if available):

  - Look for any alerts, warnings, or abnormal status indicators – this is especially critical on systems that do not have a live digital connection to the instrument.

  - Compare the local transmitter PV to the HMI value for same measurement (assuming matching units without intermediate scaling or calculations).
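The comparison steps in this checklist reduce to a small amount of arithmetic. The sketch below assumes plain linear 4-20 mA scaling (no square-root extraction or other intermediate calculation); the function names, the 0-300 psig range, and the 0.5% of span deviation limit are hypothetical.

```python
def ma_to_pv(current_ma, lrv, urv):
    """Convert a 4-20 mA signal to engineering units using linear scaling."""
    return lrv + (current_ma - 4.0) / 16.0 * (urv - lrv)


def deviation_pct_span(value_a, value_b, lrv, urv):
    """Difference between two readings of the same variable, in % of span."""
    return (value_a - value_b) / (urv - lrv) * 100.0


def spot_check(local_pv, hmi_pv, lrv, urv, limit_pct=0.5):
    """Compare the local display to the HMI and flag large deviations."""
    dev = deviation_pct_span(hmi_pv, local_pv, lrv, urv)
    return {"deviation_pct": dev, "flag": abs(dev) > limit_pct}


# Hypothetical loop ranged 0-300 psig: the local display reads 150.0 psig,
# while the analog input sees 12.10 mA.
hmi_pv = ma_to_pv(12.10, lrv=0.0, urv=300.0)
result = spot_check(local_pv=150.0, hmi_pv=hmi_pv, lrv=0.0, urv=300.0)
print(round(hmi_pv, 3), round(result["deviation_pct"], 3), result["flag"])
```

Here the analog path reads roughly 0.6% of span higher than the transmitter's own display. With the transmitter itself reading correctly, that points toward the signal path, input card, or scaling, which is exactly the kind of problem a transmitter-only calibration never sees.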

A few 5-minute checks can deliver greater reliability benefits than a full day of routine annual calibration checks—while being way less likely to introduce new problems.

Although we're focusing on transmitters in this discussion, these walk-by checks provide even greater benefits for positioners, actuators, and valves (where all the moving parts are) – but that is a whole other discussion.

Using these simple checks, a trained technician with a simple checklist can identify most problems long before they cause any serious performance issues, dramatically improving overall reliability through frequent, easy-to-perform inspections.

Greg: What about leveraging diagnostic capabilities in modern instruments?

Mike: In our previous article, we discussed how diagnostic capabilities of SMART instruments are drastically underutilized. Based on the ARC Field Device Management study, modern diagnostics can detect 42-65% of developing issues with HART devices and 58-73% with Foundation Fieldbus, compared to only 8-12% with traditional analog devices.

Yet only 8% of facilities fully implement these capabilities, with 38% not using them at all.

Part of the reason I promote the walk-by inspection is so that sites can at least know the transmitter has an alert or warning since most sites would never know otherwise. Many smart instruments are not tied to any type of network and there is no way to realize a problem exists unless personnel are constantly looking at the displays. The extra few hundred dollars for a meter display option on a transmitter is an easy decision for this reason among many others.

Just paying attention to the transmitter displays as you walk around a plant can help find many emerging problems. I’ll often notice a transmitter that has an alert showing on the display (which may have been there for weeks or months or longer). Sometimes it is just a minor or insignificant alert, but sometimes it indicates a more serious problem such as impulse line plugging, failover to a backup RTD, power advisory or loop impedance alerts, etc.

Greg: Many facilities worry about regulatory compliance when extending calibration intervals. How do you address that?

Mike: Most regulations don't specify fixed intervals - they require a documented, justified program. I point clients to ISA-RP105.00.01 as the best pathway for most of what we have discussed today.

It is usually well worth the time required to write up a request to modify requirements – especially if they are excessive based on newer technology. Most agencies are typically reasonable if the appropriate information and references are provided. ISA-RP105.00.01 is a powerful resource in getting regulatory agencies to allow things to be done a bit smarter or better.

The documentation should include statistical analysis of historical calibration data, manufacturer specifications, process requirements analysis, and risk assessment methodology. When properly documented, regulatory agencies typically approve extended calibration intervals and other requests if they are solidly justified.
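As a starting point for the statistical part of that documentation, historical as-found errors for a group of similar instruments can be summarized in a few lines. This is only an illustrative summary, not the method prescribed by ISA-RP105.00.01 or by any regulator; the function name, the sample errors, and the 0.5% of span tolerance are hypothetical.

```python
from statistics import mean, stdev


def summarize_as_found(errors_pct, tolerance_pct):
    """Summarize historical as-found errors (% of span) against a tolerance."""
    n = len(errors_pct)
    if n < 2:
        raise ValueError("need at least two as-found results")
    within = sum(1 for e in errors_pct if abs(e) <= tolerance_pct)
    return {
        "count": n,
        "pass_rate": within / n,                  # fraction found in tolerance
        "mean_error_pct": mean(errors_pct),       # a persistent offset shows here
        "stdev_pct": stdev(errors_pct),           # sample standard deviation
        "max_abs_error_pct": max(abs(e) for e in errors_pct),
    }


# Hypothetical as-found errors from past calibrations of one transmitter model
history = [0.05, -0.02, 0.08, 0.10, -0.04, 0.03]
summary = summarize_as_found(history, tolerance_pct=0.5)
print(summary["pass_rate"], summary["max_abs_error_pct"])  # 1.0 0.1
```

A history in which every as-found result sits far inside the tolerance is the kind of evidence that supports a request for a longer interval, alongside the manufacturer specifications, process requirements, and risk assessment.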

Greg: Those are practical insights our readers can implement. Any final thoughts on getting started?

Mike: Start with ISA-RP105.00.01.

Then ensure that the person(s) responsible for administering I&C programs are fully fluent in the technology, the reasoning, and standards such as ISA-RP105.00.01. To achieve this properly, a site will need someone who can make sense of that and all the underlying I&C technical details that we have been discussing in the past few articles.

Whoever is going to build the program MUST understand not only the administrative and policy issues but ALSO the underlying principles. Many brilliant engineers have had zero training on the details of instrumentation (4-20mA concepts, SMART transmitters, calibration program principles, etc.) and they will need this just as much as, or more than, the technicians. It is often surprising to me how many otherwise brilliant engineers are on the left side of the Dunning-Kruger curve when it comes to Instrumentation & Controls. Most are shocked when they begin working with real equipment and often say the same thing I have heard hundreds of times: “There is a lot more to this than I realized.”

Once the people charged with implementing or revising the I&C maintenance program are adequately trained, the next step should be to train the workforce and include the details of their specific organization. That can sometimes be managed internally if high level personnel are available. But along with covering the basics, it needs to cover the specific changes and reasons for any procedural or program changes so the techs can support it properly (and so they buy into the program). For example, explaining WHY they should not be making ‘micro-adjustments’ to calibrations and how/why to record values and how to calculate errors and how to ensure consistency in calibration records, and so on.

Once personnel are trained and programs are implemented, the data gathered will help guide the way. Listen to the trained personnel for ideas and problems noted and act. In a brief time, an I&C maintenance program can make a substantial difference in plant operations.

Top 10 best decisions by instrumentation engineers

- Stepped thermowells with insertion length at least five times diameter with tip near pipe center.

- Spring loaded sheathed thermocouple or RTD sensors.

- Temperature transmitters mounted on thermowell.

- Solids free liquid and gas velocity at thermowell tip at least 5 and 50 fps, respectively.

- No impulse lines by direct mounting of pressure transmitters wherever possible.

- Radar level, magmeters, and Coriolis meters wherever possible.

- High performance glass electrodes with removable double junction reference electrodes.

- Electrode tip near pipe center with 5 to 10 fps to minimize coating and response time.

- Three electrodes in same pipeline with middle signal selection.

- Globe valves or splined shaft rotary valves with low seat and seal friction, respectively, and with pneumatic actuator, smart positioner, booster on positioner with partially open bypass valve on positioner outlet if needed, and low friction loosely tightened packing.

About the Author

Greg McMillan

Columnist

Greg K. McMillan captures the wisdom of talented leaders in process control and adds his perspective based on more than 50 years of experience, cartoons by Ted Williams and Top 10 lists.
