Object Architectures in an Increasingly Services-Oriented World

By Paul Miller, Contributing Editor

Distributed objects work well within a common technology and network environment.

In the beginning, we had simple data tags, such as pressure, level, flow or temperature process variables. With tag data, dedicated connections need to be created to get the data from the source (typically a PLC or process controller) to the consumer (typically an HMI, alarming, trending or historian application).

As the concepts of object-oriented programming developed over time, tags evolved into objects (see sidebars at the end of this article). Objects feature multiple attributes, including both behavior and state, in addition to the process-variable data. By definition, objects also display inheritance characteristics that allow users to replicate and modify them for re-use. Standards such as Microsoft's COM (Component Object Model) enabled connections between different objects within the same computer or computing platform.

According to Mike Brooks, Staff Technologist in Global Manufacturing at Chevron Refining in San Ramon, Calif., “Object technology in automation has been around for quite a while. We developed some earlier object technologies back in the mid-1990s while I was at Object Automation and later at IndX, a company that I founded that subsequently was acquired by Siemens. IndX was built on a distributed object framework. This was pretty innovative object technology at the time, built on an information model based on objects and classes of objects. We had objects that represented physical objects and we had class-based views against those objects. For example, if we had a compressor object, we could put six different views on the object so the planner or operator or maintenance guy could see the object.”

Delta V system in Petrobras control room.

Photo courtesy of Emerson

“While objects are fine,” said Brooks, “once you get the object structure built, and especially if you’re going across computers, with objects, you end up with a very fragile infrastructure that binds the work processes within the IT underpinnings. If you connect one database to another, it’s bound, and if you want to change it, you have to get a whole bunch of IT guys to pull this apart and rearrange it to put it all back together. COM and CORBA were technologies for distributed objects. These are now being supplanted by services-based technologies and for good reasons.”

According to Dave Hardin, a system architect at Invensys, “Object-oriented (OO) technology and architectures have been around in the software world for quite some time. The concepts were becoming well-established in the software industry by the early 90s, and most modern software development embraces OO technology and related modeling languages such as UML (Unified Modeling Language) to describe the structure and behavior of systems. Over time, object concepts have emerged from the internals of application software and become more visible to end users. Some of the names are changing, but the core concepts remain alive and well. Users may not relate to ‘geeky’ software terms, such as ‘subclassing’ and ‘inheritance,’ but they do understand the concept of templates, deriving templates from base templates, and the concept of object instantiation and deployment. (See the sidebar, "Object Templates Simplify Engineering at Santee Cooper Power," at the end of this article.) The object paradigm is natural and easy to understand. This speaks well of the ability of OO technology to describe real-world systems in a meaningful way.”

While a big improvement over simple tags, Microsoft’s COM bound the objects to the underlying IT infrastructure, making them both protocol- and platform-dependent and requiring the creation of well-defined and often inflexible object architectures.

According to Hardin, “The success of software object technology led to the belief that objects should not be constrained by a hardware platform, but instead should be available transparently throughout a network. Why be limited by artificial barriers? This led to the development and widespread use of distributed object technologies.”

ABB IndustrialIT makes extensive use of distributed object technology.

Photo courtesy of ABB

Distributed object technology, based on vendor-developed standards, such as Microsoft's distributed flavor of COM known as DCOM (Distributed Component Object Model) and the Object Management Group's CORBA (Common Object Request Broker Architecture) standard for UNIX platforms, is used in many of today’s advanced industrial automation systems. Distributed object technology simplifies data access within a network. OPC Data Access (DA) is one popular example. Microsoft-based OPC DA servers and clients are used throughout the industry to provide data connectivity between different vendors’ intelligent devices (such as PLCs) and software applications (HMIs, alarm packages, trending packages, historians, etc.).

“OPC DA tags as objects probably represent the biggest footprint of DCOM distributed-type architectures in this market space today,” said Stephen Briant, FactoryTalk Services marketing manager at Rockwell Automation in Pittsburgh, Pa.

According to Neil Peterson, Delta V Product Manager for Wireless and Enterprise Integration at Emerson, “Distributed object technology has been the mainstay of process systems integration since the first release of OPC DA. Customers benefited from applications created by different vendors that were easy to integrate using a standard interface, rather than requiring custom programming. The caveat was that it required all integrated applications to be on the same operating system platform. This was OK, since most process systems had standardized on Microsoft Windows as the application platform. However, business systems are deployed across non-Windows platforms, and that has limited the integration of the process and business systems.”

According to Marc Leroux, collaborative production management marketing manager at ABB, “ABB has been delivering object technology and its benefits to our customers for many years; we introduced our flagship object-based System 800xA Extended Automation system in 2004, and before that we delivered these benefits with earlier versions of Industrial IT beginning in 2000. One of the basic concepts of the system is that it is possible to create a base object that is a representation of a real-world object; a pump or a valve for example. The base pump object, for example, already has all of the characteristics of a pump modeled in it. Specific types of pumps can be subclassed from the base pump, inheriting all of its characteristics. Once a specific device is modeled, instances of it can be implemented, exactly as they would be in the real world,” said Leroux.
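The base-object idea Leroux describes corresponds closely to ordinary class inheritance. The following is a minimal sketch of that idea, not ABB's implementation: the class names `Pump` and `CentrifugalPump`, their attributes, and the device names are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Pump:
    """Hypothetical base object holding what every pump has in common."""
    name: str
    rated_flow_m3h: float
    running: bool = False

    def start(self) -> None:
        self.running = True

    def describe(self) -> str:
        state = "running" if self.running else "stopped"
        return f"{self.name}: {type(self).__name__}, {state}"


@dataclass
class CentrifugalPump(Pump):
    """A specific pump type: inherits everything above, adds only what differs."""
    impeller_diameter_mm: float = 250.0


# Instances correspond to individual devices in the plant.
p101 = CentrifugalPump(name="P-101", rated_flow_m3h=120.0)
p102 = CentrifugalPump(name="P-102", rated_flow_m3h=80.0, impeller_diameter_mm=200.0)
p101.start()
print(p101.describe())
print(p102.describe())
```

Only the impeller attribute had to be written for the specific pump type; the start behavior and the description logic come from the base object.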

“Any extension to the object, an ‘aspect’ in ABB terminology, which is added to the object, can be immediately made available throughout the system. For example, a maintenance manual for a specific type of pump is attached to the pump object; it is then available to all instances of the pump. Another example would be adding a new piece of software that could monitor and report on certain operational conditions inside of an object, monitoring levels or temperatures inside of a vessel, for example. This can be attached to the object at any point, and it immediately becomes available. Of course, these examples are relatively simple. The true benefit is in implementing a complex piece of code, monitoring the overall health of a heat exchanger or a reactor, for example. In an ABB environment, these can be added at any point after the system is implemented, and they become available for reuse.

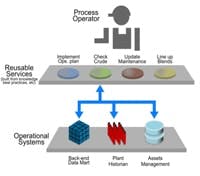

SOA allows decomposition of applications into services.

Source: Chevron Refining

“Another concept in the ABB architecture is that specific data elements, the current flow through a pump, for example, are maintained in one spot and referenced. This eliminates the need to replicate information in multiple spots and ensures the integrity of the information. In real-world terms, this approach greatly reduces engineering costs. By being able to subclass an object, the engineering effort is limited to what is specific to a particular type of object. The behaviors are well-known, reducing validation requirements, but the real benefit comes with making changes to an object. If a decision is made to make a system-wide change, the maximum run time for a specific type of motor that is common throughout the facility, for example, the change can be made in one spot, and all instances of that motor would be automatically updated to reflect this,” said Leroux.
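The "change it in one spot" behavior Leroux describes can be sketched by having each instance reference a shared type definition rather than copy its values. This is an illustration under assumptions, not a description of any vendor's system: `MotorType`, `Motor`, the `max_run_hours` attribute and the numbers used are hypothetical.

```python
class MotorType:
    """Hypothetical type definition shared by every motor of this kind."""

    def __init__(self, type_name: str, max_run_hours: float) -> None:
        self.type_name = type_name
        self.max_run_hours = max_run_hours


class Motor:
    """One installed motor; it references its type rather than copying it."""

    def __init__(self, tag: str, motor_type: MotorType) -> None:
        self.tag = tag
        self.motor_type = motor_type
        self.hours_run = 0.0

    def needs_service(self) -> bool:
        return self.hours_run >= self.motor_type.max_run_hours


standard = MotorType("standard-duty", max_run_hours=8000)
motors = [Motor(f"M-{n}", standard) for n in (201, 202, 203)]
motors[0].hours_run = 6500

before = [m.needs_service() for m in motors]  # evaluated against the 8000-hour limit
standard.max_run_hours = 6000                 # one change, made in one spot
after = [m.needs_service() for m in motors]   # every instance sees the new limit
print(before, after)
```

Because the limit is stored once and referenced, there is no second copy that could drift out of step with the first.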

Services-Oriented Architectures Provide Foundation for Cross-Platform Communications

Certainly, distributed object technology represented a big leap forward for the industry. However, neither DCOM nor CORBA functions well in a cross-platform environment or through network firewalls. To overcome these types of cross-platform interoperability issues, the IT world began to develop the concept of standard “services,” including web services. These services are now making their way into the industrial world where they are helping to break down artificial functional barriers to enable software-based solutions to map to actual work processes more closely.

“The idea with services is that if you can define a service that represents an interface between two systems, then the manifestation of the plant itself and the interface are independent of each other,” commented Chevron’s Brooks. “There probably will still be objects within the scheme, but instead of communicating from within DCOM or CORBA where the objects are instantiated from the hub, now they’re de-coupled with a service.”

According to Hardin, “Services define the interface and communication mechanisms between objects and other software components. These interfaces and mechanisms become the communication contract to which each system must adhere. Some services, web services for example, support widely used protocols, platforms and systems. Other services support specific communication functionality, such as fast data transfer, strong security, failure resilience or event messaging. The contract forms the basis for interoperability and helps decouple the functions performed by the service from the technology used to implement the service.”
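A service contract of the kind Hardin describes can be sketched as an interface that callers depend on, with the implementation free to vary behind it. The example below is a hedged illustration using Python's `typing.Protocol`; the contract name `ProductionResultsService`, its single method, and both implementations are hypothetical and do not represent any vendor's or standard's actual interface.

```python
from typing import Protocol


class ProductionResultsService(Protocol):
    """Hypothetical contract: what a consumer may ask, not how it is answered."""

    def units_produced(self, line_id: str) -> int: ...


class HistorianBackedService:
    """One implementation, reading from an in-memory stand-in for a historian."""

    def __init__(self, records: dict[str, list[int]]) -> None:
        self._records = records

    def units_produced(self, line_id: str) -> int:
        return sum(self._records.get(line_id, []))


class ManualEntryService:
    """A different implementation behind the same contract."""

    def __init__(self, totals: dict[str, int]) -> None:
        self._totals = totals

    def units_produced(self, line_id: str) -> int:
        return self._totals.get(line_id, 0)


def shift_report(service: ProductionResultsService, line_id: str) -> str:
    # The caller depends only on the contract, so either implementation works.
    return f"{line_id}: {service.units_produced(line_id)} units"


print(shift_report(HistorianBackedService({"line-1": [40, 35, 25]}), "line-1"))
print(shift_report(ManualEntryService({"line-1": 100}), "line-1"))
```

Swapping one implementation for the other requires no change to `shift_report`, which is the loose coupling the contract is meant to provide.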

SOA maturity model.

Source: Chevron Refining

“SOA means different things to different people,” said Hardin. “However, the industry is converging on the concept of service ‘contracts’ for interactions between systems. These service contracts provide the core of an SOA. Services provide a loose coupling between applications and objects to minimize the effect of changes within objects.”

According to Kevin Fitzgerald, senior program director at Invensys Process Systems, “A service is a software structure that, rather than just providing data as a form of an API, is actually a self-contained application entity that performs a business function. I like to use the example of a restaurant where the host or hostess, the waiter or waitress, the wine steward and the bus person each provide a discrete ‘service’ that, when combined, results in a satisfactory overall dining experience for the patron. The goal in a services-oriented environment is to decompose the overall business into a series of interoperable and interchangeable services that can be recombined freely as needed to meet changing business requirements. The services approach provides much more operational flexibility and business agility than approaches based on a monolithic IT infrastructure. The big challenge is to design services that have the appropriate degree of granularity; they must be functional enough to add real value, but not so large and program-like that they cannot easily be reused or modified. To do so, business-savvy IT people and IT-savvy business people need to put their heads together. We’re not at that point yet, but will be soon.”

Chevron Is Basing Its IT Infrastructure on Services to Be Able to Better Support Business Processes

According to Chevron’s Brooks, “We didn’t do much with distributed object technologies with DCOM and CORBA, and we’re now looking to leapfrog these into services. I came to Chevron three years ago to look at what we needed to do strategically for our IT infrastructure for all our refineries the world over. The key space that we want to fill is the space between the business systems and the DCS systems. I call that space the production management space, although some in the industry like to call it MES.

“As we look at the space in the process industries, we have a whole smorgasbord of different applications that fill that space (from maintenance systems to historians to reliability systems to lab systems, the DCS, the planning system, the compliance system and so on), which all came to be owned by various departments within the refinery. Some of these applications were very good, and they worked well for 10 to 15 years or so. The problem is that they are owned by different departments, and the overarching business processes don’t just belong to one department,” says Brooks.

“The example I often give is that of a guy walking around the site,” Brooks explained. “This guy, an outside operator, for example, hears noises coming from a piece of equipment. Could be a compressor. He looks at the instruments, and they indicate an impending failure. What happens then is that the maintenance department wants to take it out before there’s a big failure…but the operations department wants to keep it running because they’re in the middle of a key production run. So what’s the right thing to do? There’s no perfect answer, and the way it gets answered right now is usually in the morning meeting, probably by the guy that shouts loudest.

Chevron El Segundo Refinery.

Photo courtesy of Chevron Refining

“What we see with so many initiatives and technologies (real-time, lean, virtualization, etc.) is that they are all artifacts of something higher, and that’s what we’re trying to concentrate on at Chevron. The thing that’s higher, of course, is the work process, something that stems from the whole business process and the business-process modeling, which then drops down into the tasks that need to be done, the interactions between people, and the roles and responsibilities that we have. So as we build our next generation of IT infrastructure in this space, we’re looking to use work processes as the key driver so that whenever anyone looks at a screen display or a report, it’s because it’s part of a business process.

“We want to manage the business process such that all the information that’s required for the collaboration and decision-making at any point in time is automatically inserted into that business process. We believe that using services is the appropriate way to get that done.”

More Vendor Perspectives on SOA

According to Dave Emerson, principal systems architect at Yokogawa’s U.S. Development Center in Carrollton, Texas, “Distributed object technology has been in use in IT and manufacturing for many years. For example, CORBA and DCOM technology each describe themselves as object technology. Recently, with the development of web services and service-oriented architectures, a new generation of technologies is replacing the earlier generations.

“Web services are increasingly being used in manufacturing automation systems, MES and, of course, in ERP systems. The ability of web services to easily connect across firewalls, utilize mainstream information technology and contain complex data structures in XML format provide many advantages. Integrating work order processing from operational and maintenance systems to CMMS/EAM systems is one example. The MIMOSA standards group is working with owner/operators and automation system suppliers to establish pilot projects for this type of application,” Emerson said.

“Another example is integration of production management and business systems. Usually this includes business systems sending production schedules to automation systems, and the automation systems sending back production results. The ISA-95 standard and B2MML (Business To Manufacturing Markup Language) are used in many companies to implement this type of interface.

“As large, multisite companies move to SOAs at the IT level, it will become critical for them to integrate their manufacturing systems into the architecture. IT infrastructure companies have different offerings in this area, but all are based upon web services and delivering robust infrastructures. At Yokogawa, our intention is for our automation systems to cleanly interface with our customers’ preferred IT infrastructure environment. To do this, we are working with multiple IT suppliers and utilizing standards-based web services as our preferred interfaces to exchange data with other systems.

Chevron Richmond Refinery.

Photo courtesy of Chevron Refining

“Yokogawa is also a strong supporter of the OpenO&M standards. OpenO&M is an umbrella group of different standards (ISA-95, WBF, MIMOSA, Open Applications Group and the OPC Foundation). Each individual group is working to develop standards for integrating manufacturing systems using XML and web services. Yokogawa believes that standards-based interfaces are required to provide the lowest possible total cost of ownership for our customers. Standards-based interfaces will enable manufacturers to integrate different manufacturers’ systems more easily, as each will be able to ‘speak’ a standards language and avoid the many-to-many point interfaces that using proprietary formats would require,” said Yokogawa’s Emerson.

According to Emerson’s Peterson, “Emerson promotes the platform independence provided by an SOA built on web services as the direction the industry is taking, and we’re continuing to develop web services that provide meaningful information and integration. These services allow customers to integrate enterprise-level applications with the process system more easily and enable greater productivity by tying process and business information together at all levels of the organization. This can push decision making down the management chain, enabling quicker reactions to changes in the market.

“Web services are, by their very nature, self-describing. This allows for easy integration without prior knowledge or distribution of precompiled code. Application developers simply need to know the name of the service and its location on the network to begin immediate integration. Currently vendors are beginning to offer their own custom set of services or custom applications that integrate proprietary services to address specific customer needs. These are very specialized solutions that come at a higher cost of custom development,” said Peterson.

Hal Allen, SCADA specialist at Santee Cooper Power.

Photo by Jim Huff, courtesy of Santee Cooper Power

According to Rockwell’s Briant, “FactoryTalk Services is currently based on DCOM, but we’re moving the platform more toward next-generation technology by exposing objects as web services. This can provide a lot of value for businesses.

“Big benefits for customers include the ability to get the right information, providing users with access to the things they need,” said Briant. “It certainly also reduces engineering costs because you don’t have to duplicate information or create users twice or three times. You can use some of the objects that we support in our services and then link them to a Windows object of a similar type for Windows-based security. From an operations and maintenance perspective, as people come and go from a plant or organization, you can add users, take away users and do that at the IT level without disturbing the plant-level maintenance support people. Some of the appeal is that IT people can understand what’s happening at the automation level.

“SOA will absolutely play an important role in automation. I think the concept of interoperability, or what the IT people would call application integration, is key. From an IT perspective, questions like ‘How do I integrate applications across workflows?’ often come up. This is an expensive item that costs money to knit together applications within a company or across vendor boundaries,” said Briant.

Santee Cooper Power substation.

Photo courtesy of Santee Cooper Power

“Business systems want to have more information about and a role in driving production. More information will be required to pass between the production and business systems than was possible to package up and serve out as a single tag, as was the case with OPC DA. Part of that will be knitting in the customer, the user, the operator, the receiver, the shipper, all the people that touch that manufacturing activity, and making sure that they package all that up. The goals and objectives of OPC UA fit that model of how we as a community of industrial automation suppliers can expose things at a richer level to be able to answer questions such as: Can we do it securely? Can we add users and groups? Can we add a security model that guarantees that those requesting information are authorized to do so? These kinds of questions are starting to come to the forefront,” said Briant.

“The big opportunity here is to make sure that we can decouple the business process from the IT underpinnings,” commented Chevron’s Brooks. “An enterprise service bus can give you a tidier way to do point-to-point integration, but it’s still point-to-point integration. It’s a little bit easier, but it’s not the Holy Grail. We’re looking to go higher than that by using services. You have to consider a services layer through which the domain specialists, the people who really know the business, can build a process workflow independent of the IT underpinnings. We have to do this for eight refineries, so we want common processes that can be shared among the eight refineries, even if the implementation of the IT underpinnings is a little different in each one. We want to be able to put the processes in the same way and then, when a process is dropped inside a system at a given refinery, it would automatically know how to connect to the external systems,” said Brooks.

Objects vs. Tags

Steven Garbrecht, Product Marketing Manager at Wonderware, explains the differences between tag-based systems and object-oriented systems: “From the inception of PC-based HMI and supervisory products, the development of data access, scripting, alarming and data analysis has been based on the concept of tags. While simple and very portable from one project to another, a tag-based environment has the downfall of a flat namespace, with no inherent ability to link elements together into more intelligent structures with built-in relationships and interdependencies. Global changes to a tag database are typically done externally to the development environment, as a text file or in tools like Microsoft Excel, and then re-imported into the application. Re-use in a tag-based system is commonly instituted through dynamic or client-server referencing, which allows a common graphic to be created, and then a script is executed to switch the tags being viewed in run-time. Furthermore, because of the flat structure of the application, changes need to be sought out and analyzed as to the effect on the rest of the application.

“Component-based and object-oriented development in the IT world originally referred to tools which aimed to release the developer from mundane, repetitive program tasks, while at the same time maximizing re-use through the development of common components.

“As you would expect, these tools are not an exact fit for the industrial environment. For one thing, systems integrators and production engineers are typically not computer programmers. Furthermore, there are some key architectural differences between IT and production automation applications. For example, general information technology applications typically involve database access from non-deterministic, forms-based interfaces that accomplish things like online banking, business reporting, HR management, financial accounting or static information look-up. Conversely, plant intelligence, production management or supervisory control applications involve the accessing of real-time data in PLCs, performing sophisticated calculations to determine flows and production numbers, and displaying this real-time data in graphics-intensive client environments or analysis tools, and also writing to and reading from production and operational related databases. The two environments are different enough to dictate that component object-based tools be built purposely for one setting or the other.”
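The contrast Garbrecht draws between a flat tag namespace and structured objects can be made concrete with a small sketch. It is illustrative only: the tag names, the `Tank` class, the values and the high-level limit are hypothetical and are not drawn from any Wonderware product.

```python
# Tag-based view: a flat namespace in which relationships exist only by naming habit.
tags = {
    "TK100_LEVEL": 3.2,
    "TK100_TEMP": 41.0,
    "TK200_LEVEL": 1.1,
    "TK200_TEMP": 38.5,
}


def tank_tags(prefix: str) -> dict[str, float]:
    # Finding "everything about tank 100" means searching by string convention.
    return {k: v for k, v in tags.items() if k.startswith(prefix + "_")}


# Object-based view: the structure itself records what belongs together.
class Tank:
    def __init__(self, name: str, level_m: float, temp_c: float) -> None:
        self.name = name
        self.level_m = level_m
        self.temp_c = temp_c

    def high_level(self, limit_m: float = 3.0) -> bool:
        return self.level_m > limit_m


tanks = [Tank("TK100", 3.2, 41.0), Tank("TK200", 1.1, 38.5)]

print(sorted(tank_tags("TK100")))
print([t.name for t in tanks if t.high_level()])
```

In the flat version, nothing but the naming convention ties a level to its temperature; in the object version, the relationship and the behavior that uses it travel together.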

Object Templates Simplify Engineering at Santee Cooper Power

“The concept of templates has helped me out quite a bit,” commented Hal Allen, SCADA analyst at Santee Cooper Power in Moncks Corner, S.C. Allen is currently implementing an InFusion SCADA system from Invensys Process Systems to monitor and manage 56 electrical substations for the state-owned utility. “When you get an InFusion system, you have a lot of devices that are created from the pre-defined analog and digital objects provided with the system. A template can be derived from another template, or an object can be derived from a template, and then you can go back and make wholesale changes to everything that has been derived from that.”

“In the initial phase of the project, I created my devices from the generic discrete and analog device templates, so that now I have a hierarchical view of how the devices, breakers for example, work in the field,” said Allen. “Once you have all these devices built as a template, then you can easily create your real-world objects. Say you’ve created a couple of hundred devices, and you realize that you’ve left something out or need to change something. You can go back and make a change in one place and have all the hundreds of objects created from that template updated automatically.”

“A good example of a device that I’ve created from the analog device object is a transformer, which is an integral part of a substation. We have transformers at different voltage levels, and some are older than others, but they all have some common attributes. My philosophy was to create a base transformer object template having common transformer attributes, such as a fan or a megawatt value. Then I just modify the template as needed to represent the actual transformer,” said Allen.

The View from ARC

According to Bob Mick, vice president of enterprise services at ARC Advisory Group, “CORBA and DCOM provided communications mechanisms and exposed the underlying product architectures and basic objects and data, but that’s changed quite a bit. Certainly, the communications have gotten a bit more general; using services you can access information from a broader range of networks. More than that, the services have gotten more macro-oriented, where they actually do things and are not so data-oriented. With services, you’re not thinking so much about the data as much as moving the data to a new location.”

“I believe that services are at a higher level of abstraction than are objects,” said Mick. “All applications are still implemented with objects. That’s not really changed at all; in fact, it’s gotten more so. But what gets exposed between applications is more at a macro-services level than the actual underlying object architecture.

“Looking into the future, applications will communicate with each other through services, so they will not need to be so intimately aware of each other’s object architectures. With services, end users also don’t have to be aware of the object structures. For example, if you look at an automation application, you typically deal with tag names and some advanced information associated with those tag names and the functions you can perform on them. That model will continue. However, at a higher level, when you’re dealing with more complex structures, such as a report, a set of information or a schedule, the operation itself becomes your focus. More of the logic actually gets built into the application itself. What goes on behind the scenes doesn’t matter that much.

“Data is more important than ever. It’s not like the data goes away; it’s just the way we look at things. Interactions between applications are at a little bit higher level. DCOM and CORBA were better than the proprietary interfaces that preceded them, but with DCOM and CORBA, you still had to write program code, and you still had to know everyone’s interface and worry about how they implemented their interfaces. With services, a lot of these things get standardized, and applications in different environments are using a common technical platform, as opposed to using DCOM in the operations applications and CORBA on the enterprise side.”

Another advantage is that security is built in as part of the service interface, allowing higher levels of security than with DCOM or CORBA. DCOM relied on Windows security. With web services, for example, the security has been defined by an entire community of users and vendors. Compare that to DCOM, where security was defined by a single vendor.

You probably wouldn’t use web services for real-time process control where millisecond response is required, but they can come in real handy for those auxiliary things such as reporting and general administrative functions, where you’re not so concerned with deterministic performance. Web services are good for getting information from automation systems into operations management systems, but not for communication between a controller and a device. This is not to say that special-purpose services can’t be used for more deterministic functions, such as within a control system.

Conventional distributed control objects connect between different systems using proprietary techniques. With web services, there are no such things as connections, so you have to build the connection around the services. Web services are more of a query-response interaction than a connection.

“Distributed objects have been around since the early 90s. For automation systems, connections between objects won’t be moving to services. Distributed objects within a control system won’t be going away because they implement control strategies that involve interaction between instruments and controllers and valves. This is a lot different than trying to pick up a bunch of data and information for reports,” Mick said.